Archived - System Under Development Audit of Education Information System

Archived information

This Web page has been archived on the Web. Archived information is provided for reference, research or record keeping purposes. It is not subject to the Government of Canada Web Standards and has not been altered or updated since it was archived. Please contact us to request a format other than those available.

Date: November 2010


Table of Contents

- List of abbreviations and technical definitions

- Executive summary

- 1. Background

- 2. Objective and scope

- 3. Statement of assurance

- 4. Methodology

- 5. Findings and recommendations

- 6. Conclusion

- 7. Management Action Plan

- Appendix A - Summary of key external dependencies

- Appendix B - Key elements of Education Information System success

- Appendix C - Business outcomes dependent on sufficient adoption and data sharing

List of abbreviations and technical definitions

List of abbreviations

List of technical definitions, as used in this report

Data Collection Instruments (DCI) - recipient reports that are part of the terms and conditions of various Contribution Agreements, representing the sole source of data collected for INAC-funded education programs.

Go-live - date on which EIS is moved to production (system is on-line, users are granted access and can create/submit proposals and run reports, and in-service support of EIS begins)

Integration - assembling system components (or subsystems) into a single system such that the subsystems function together and go on to operate as a single system

Interface - a point of interaction/communication between systems or system components, which may include both automated and manually-enabled data exchanges

Stabilization - transition period following go-live when the project team is ramped down and EIS operations are handed off

Executive summary

Background

Indian and Northern Affairs Canada (INAC or "the Department") spends approximately $1.7B per year on Aboriginal education programs and has primary responsibility for education for First Nations people on reserves. In May 2008, INAC's initiative to support foundational change in First Nations education was approved. A performance measurement system, termed the Education Information System (EIS), was identified as an essential tool to support this reform. EIS aims to support improved accountability for education programs, inform changes to policy and program development, and improve service delivery.

Funding of $26.6M for EIS system development and implementation and $450K for ongoing annual maintenance and support was approved for the project. Starting in 2008, external consultants were hired by INAC to manage the EIS project and develop the system. From 2008 to 2010, $5.5M was devoted to preliminary project planning and documentation, $0.8M of which was provided by existing Departmental funds. In June 2009, EIS was granted Preliminary Project Approval (PPA) for INAC's proposed EIS design. If Effective Project Approval (EPA) is granted, funding of $21.7M will be released to develop and implement the approved EIS design.

INAC's Audit and Evaluation Sector (AES) engaged Ernst & Young to conduct this audit of EIS, which took place from June to October 2010 while the project was in its early, pre-EPA development stages. The audit results, including the findings and recommendations in this report, are based on information made available to us for review between June and August 2010.

Objective and scope

The audit objective [Note 2] was to provide assurance that the EIS project is on track to deliver, in September 2012, a system, processes and controls that will securely and reliably administer a comprehensive national education information resource for school/institution-based learning. The scope included the following three areas of risk identified during the planning phase of the audit:

- Implementation schedule, including implementation approach and timeline, project dependencies, planned interfaces, data cleansing and conversion activities, and risk management procedures.

- Education Information System feasibility, including project estimates (budgeting, resources plans, project quality estimates and total cost of ownership), "options-analysis", and strategic feasibility pertaining to performance measurement.

- Performance measurement outcomes, including alignment with INAC's education performance measurement strategy, stated outcomes, mandatory requirements and overall business case.

Methodology

A risk-based audit program was developed during the planning phase of the audit to focus on areas of greatest risk. The audit program was structured to include documentation reviews and interviews. All audit fieldwork was conducted at INAC national headquarters in Ottawa/Gatineau. The audit planning took place in June 2010, followed by the audit fieldwork from July to August 2010. Reporting took place from September to October 2010.

To allow INAC management to consider risks identified throughout the audit in a timely manner, we shared preliminary findings with INAC senior officials in mid-September 2010.

Findings and conclusions

Three audit findings were identified; to summarize, we found a lack of evidence to demonstrate that:

- Risks can be effectively mitigated under the planned project timeline using the single-release implementation approach selected through an "options analysis" process

- The EIS plan is strategically (with respect to performance measurement), technologically and economically feasible, with the proposed design, approach and "options analysis" confirmed by an independent feasibility study

- EIS will result in the achievement of INAC's performance measurement objectives; specifically, that reliable and complete data will be in place and collected in a consistent manner to leverage the EIS technology being invested in and achieve the stated business outcomes

While the EIS project appears to be on track to deliver a system that consolidates data, provides electronic reporting and increases the maturity and experience of the IT function at INAC, the complex functionality being invested in to enable performance measurement cannot be leveraged without a Departmental ability to collect complete and reliable data. EIS will be unsuccessful if INAC lacks the tools and information to leverage the technology, even if the system is built according to the technical specifications. Moreover, without a complete understanding of the performance measurement requirements and data, EIS requirements and expectations are likely to evolve, creating significant risk for the project.

As a result of the audit findings, we cannot conclude that the EIS project is on track to deliver a system, processes and controls in September 2012 that will securely and reliably administer a comprehensive national education information resource for school/institution-based learning.

Recommendations

We recommend that INAC examine its performance measurement expectations and validate that reliable and complete data will be gathered in a consistent manner to appropriately leverage EIS technology, before investing in system capabilities. A timeline of expected performance measurement outcomes and an estimate of the total cost of ownership should then be developed. Finally, the EIS implementation approach, timeline and mitigation strategies should be re-examined objectively and independently; this examination should validate that the project is appropriately aligned with Departmental and Treasury Board expectations and is best suited to the business requirements.

1. Background

Indian and Northern Affairs Canada (INAC) supports province-like social programs, including education, in First Nation communities. In support of its mandate, INAC has a legal responsibility to ensure that these services compare in quality to those available to other Canadians. [Note 3] The Department spends approximately $1.7B per year on Aboriginal education programs and has primary responsibility for education for First Nations people on reserves.[Note 4]

In May 2008, an initiative to support foundational change in First Nations education, entitled Reforming First Nations Education, was approved. A performance measurement system, termed the Education Information System (EIS), was identified as an essential tool to support this reform. Funding of $26.6M for EIS system development and implementation, as well as $450K per year for its ongoing maintenance and support, was dedicated to the project.

The EIS project is an effort to develop a comprehensive national education information system for school/institution-based learning, in which INAC and First Nations have a shared interest and responsibility. The new system aims to support improved accountability for education programs, inform changes to policy and program development, and improve service delivery.

The development of EIS is one of the largest and most technically complex Information Technology (IT) projects the Department has undertaken. Starting in 2008, external consultants were hired by INAC to manage the EIS project and develop the system. From 2008 to 2010, $5.5M was devoted to preliminary project planning and documentation, $0.8M of which was provided by existing Departmental funds.

In June 2009, Preliminary Project Approval (PPA) for INAC's proposed EIS design was granted. According to the PPA TB submission, EIS will provide the opportunity to make a very significant contribution to understanding and improving education outcomes for First Nation students, improve the management of the education programs, and increase accountability by providing the following capabilities:

- Streamlining data collection forms, and using reporting information from all education programs to provide a complete picture of school results

- Storing data in a "mineable" format so that it can generate useful reports, both annually and longitudinally, using all reported information [Note 5]

- Using information from other databases to provide the context in which to meaningfully measure student outcomes (e.g., socio-economic, school infrastructure)

- Enabling timely reporting on results, based on performance indicators to be developed in discussion with First Nations and other stakeholders

- Reducing reporting burden by reducing redundancy and simplifying the reporting process

- Improving data quality by streamlining information gathering and enabling early detection of anomalies that trigger automatic review of reports

- Linking education program results with INAC's financial information to better assess expenditures relative to results

- Providing timely reports on program management as well as performance measurement

If EPA is granted, funding of $21.7M will be released to develop and implement the approved EIS design, scheduled for delivery in September 2012. The Audit and Evaluation Sector (AES) engaged Ernst & Young to conduct an audit of EIS while it was in its early developmental stages, prior to EPA.

To allow INAC management to consider risks identified throughout the audit in a timely manner, we shared preliminary findings with INAC senior officials in mid-September 2010.

This audit report provides further context and details the audit findings and recommendations.

2. Objective and scope

2.1 Objective

The objective of the audit was to provide assurance that the EIS project is on track to deliver a system, processes and controls that will securely and reliably administer a comprehensive national education information resource for school/institution-based learning.

2.2 Scope

The scope of the audit included the following broad areas:

- Risk management

- Project estimates

- Project requirements

This report is organized according to the following three areas of risk identified during the planning phase of the audit:

- Implementation schedule, including implementation approach, timeline to completion, project dependencies, planned interfaces, data conversion activities, data cleansing activities and risk management procedures.

- Education Information System feasibility, including project estimates (budgeting, resource plans, project quality estimates and total cost of EIS ownership), options-analysis and performance measurement strategic feasibility.

- Performance measurement outcomes, including alignment with INAC's "Umbrella Education Performance Measurement Strategy", stated outcomes, mandatory requirements and overall business case.

The observations and findings in each of the three main areas of risk above are detailed in Section 5.

2.3 Scope limitations

Given that the EIS project is in its early planning stages, with system development to commence following EPA, some areas of review had to be excluded. These included the review and testing of system controls, disaster recovery plans, and interfaces. We reviewed documentation available between June and August 2010, which included the detailed requirements, the EIS Business Case and Project Charter.

Due to the ongoing nature of the EIS project, we understand that some of the issues identified in this report may have already been identified and addressed with an action plan by the project team. The observations in this report are based on information available during the fieldwork phase of the audit. Relevant information provided in management meetings during the reporting phase of the audit is incorporated where appropriate to provide further context to the findings and recommendations in this report. However, no further fieldwork was performed during the reporting phase to examine new areas of project progress and development.

3. Statement of assurance

Sufficient work was performed and the necessary evidence was gathered to support the findings, recommendations and conclusions contained in this report. The work was conducted according to a risk-based audit program developed collaboratively with INAC AES.

The risk-based audit program was based on Control Objectives for Information and related Technology, version 4.1 (COBIT 4.1) and the Project Management Institute's Project Management Body of Knowledge, version 4 (PMI PMBOK 4).

The audit was executed in conformity with the Internal Auditing Standards of the Government of Canada. The audit procedures were also aligned to the TB Policy on Internal Audit and related policy instruments, as well as to the International Standards for the Professional Practice of Internal Auditing. This audit does not constitute an audit or review in accordance with any Generally Accepted Auditing Standards (GAAS).

4. Methodology

4.1 Approach and timeline

The audit was conducted in three distinct phases:

- Planning phase (June 2010)

- Conduct phase (July to August 2010)

- Reporting phase (September to October 2010)

Given the size, complexity, and the current developmental stage of the EIS project, we recognize the importance of timely feedback. Accordingly, we communicated preliminary observations, risks and recommendations to senior INAC officials in September 2010.

4.2 Audit approach

A risk-based audit program was developed during the planning phase of the audit to focus on areas of greatest risk. The audit program was structured to include documentation reviews and interviews. All audit fieldwork was conducted at INAC national headquarters in Ottawa/Gatineau.

Because the audit was conducted pre-EPA, prior to the commencement of system development, no testing was performed. We did leverage research and leading practices in the following areas to assess the project plans and develop the audit program:

- Performance measurement

- IT project implementation

- Data conversion

5. Findings and recommendations

EIS is an important tool to enable INAC's performance measurement objectives and to consolidate currently unmanageable educational reports in a "mineable" format. We recognize the strategic importance of this system to the Department and the inherent challenges in the field of performance measurement. We also recognize that significant work has been performed in the pre-EPA phase, with a considerable degree of project planning. We understand from the project team that over 4,500 planning documents have been developed. Several of the planning documents reviewed incorporate leading practices in project management.

This section identifies risks, gaps and areas of potential improvement to increase the probability of EIS success. The findings and recommendations are organized below according to the following three main areas of risk identified during the audit:

- Implementation schedule

- Education Information System feasibility

- Performance measurement outcomes

5.1 Implementation schedule

EIS is scheduled to go live in September 2012, a deadline that is considered imperative for the project. Because educational reports are tied to school years, the new system must be implemented at the start of the annual Departmental reporting period (September). If the target year is missed, the next viable window will be a year away. We were informed by the project team that the September 2012 target must be met because funds for project resources cannot be sustained until the next annual reporting cycle. INAC has also made a public commitment to implement EIS at the start of the 2012 school year.

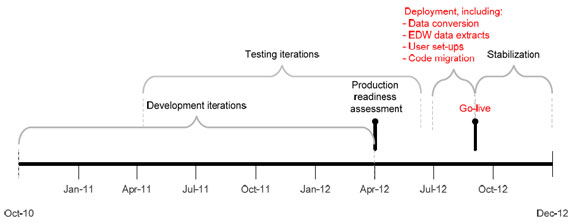

The EIS project team selected a single-release implementation approach, scheduled to go live in September 2012, as illustrated in Figure 1 below. The single-release implementation model introduces the finalized system into production at one time, as opposed to a phased model (where each implementation phase entails an "upgrade") or an evolutionary model (where a pilot, or partial implementation, is conducted prior to full implementation to mitigate risks).

Figure 1 - High-level project schedule [Note 6]

This graphic illustrates the EIS development cycle timeline in four-month intervals (from October 2010 to December 2012), indicating the key points in the cycle.

- October 2010-April 2012: development iteration period (period during which the project manager will periodically follow the project's progression, observe the progress made and express his/her concerns and preferences as the project moves forward).

- April 2011-June 2012: iteration testing period (period during which the project manager, in addition to carrying out development iterations, will assess production readiness). The production readiness assessment will take place in April 2012.

- July 2012-September 2012: system deployment.

- September 2012: system introduced into production (single-release implementation); the system will be on-line.

- September 2012-December 2012: transition period following system go-live (stabilization period).

- December 2012: end of project.

In phased and evolutionary implementations, results and "lessons learned" from production usage can feed into subsequent phases or releases. In contrast, a single-release implementation presents a finalized solution to an entire user base at once - similar to what is termed "big bang adoption" with respect to software implementations.

Big bang adoption is riskier than other adoption types because there are fewer learning opportunities incorporated in the approach, so significant preparation is required to get to the big bang. [Note 7] In addition to providing learning opportunities, phased implementations generally have the following advantages:

- Allow user feedback to be incorporated into the final design

- Provide further testing opportunities, including system integration testing

- Ease the transition between legacy systems and the new system

- Make controlling the launch simpler

- Allow for early stakeholder buy-in

- Typically deliver a greater period of usability because the system can be released earlier

While the EIS Business Case uses the term pilot with respect to a production go-live in 2012, there is no evidence to demonstrate that this pilot will be used to leverage the above-listed advantages and learning opportunities. Rather, the Business Case suggests that deployment activities will begin in July 2012, with the system going live in September 2012 and stabilizing by December 2012.

Because EIS will be implemented in a single release, it is imperative that appropriate planning and testing have been conducted prior to going live, and that all critical issues are identified and addressed in advance of the go-live date. The following areas of risk associated with the implementation approach and schedule are discussed below:

- External dependencies

- Milestone scheduling

- Mitigation strategy

5.1.1 External dependencies

EIS is highly dependent on several factors outside of the project team's control. The following are aspects of the project that entail critical dependencies, which may determine whether the implementation targets and business outcomes are met:

- Areas for which EIS project deliverables depend on external systems, agencies or resources but that are to be funded by the EIS project budget:

- Indian Registration System (IRS) data exchange

- Resource transition between two separate procurement phases of resource contracting

- Enterprise Data Warehouse (EDW) data exchange

- Development of First Nations and Inuit Transfer Payment (FNITP) system integration

- Privacy constraints, which may create challenges regarding the aggregation of EIS data between multiple systems (e.g., FNITP and EDW)

- Alignment with INAC's "Umbrella Education Performance Measurement Strategy"

- Regional resource shortages associated with data-cleansing activities

- Areas for which First Nations are primarily responsible and will assume any associated costs:

- First Nations' hardware and software, including the associated on-site technical support

- First Nations' Internet connectivity

- Areas for which INAC (outside of EIS project) is primarily responsible and will assume any associated costs:

- Cleansing legacy data

- Ongoing negotiations with First Nations for EIS adoption, including the method of use (i.e. paper-based, Portable Document Format or PDF, on-line data entry, electronic extracts mailed to INAC or local system uploads)

- Data entry for paper-based reporting into EIS

- Region-specific data collection instruments (DCI)

- Decommissioning of legacy systems

- Umbrella Education Performance Measurement Strategy

- Any FNITP problems that arise as a result of EIS integration and the associated increased load on the central HelpDesk

- Ongoing maintenance and support costs in excess of $450K annual funding, which was determined to be insufficient during project PPA phase; these costs include:

- Infrastructure and operations - software licenses, hardware, and maintenance

- Application support and architecture - hardware and software maintenance

- EDW - hardware and software maintenance

- Ongoing training - support infrastructure, processes and resources

- First Nation EIS power-users - travel and annual accommodations to receive training

- Data-sharing negotiations and establishment of documented educational data-sharing agreements with:

- Provinces

- First Nations

Our observations regarding the above-listed project dependencies are detailed in Appendix A, with associated risk assessments based on pre-EPA project plans.

Some of the external project dependencies directly determine whether mandatory requirements are met, such as decommissioning legacy systems, while others play an important role in determining whether the project remains on schedule and within budget, such as cleansing the legacy data prior to conversion.

Given the single-release implementation approach and the September 2012 go-live date, the reliance on external dependencies is an area of significant risk. While external dependencies are inevitable in any project, the following EIS project planning and scoping decisions have elevated the associated risks:

- Post-PPA introduction of FNITP system-integration requirement, which will account for a significant portion of project development resources and which relies on FNITP analysts and developers.

- Exclusion of the cleansing of legacy information prior to EIS conversion from project scope. Data cleansing is central to preventing erroneous records from becoming difficult to identify and correct. [Note 8]

- Exclusion of the decommissioning of legacy systems from project scope, despite listing decommissioning as a mandatory project requirement.

- Post-PPA removal of a stand-alone connectivity solution for First Nations communities without Internet access from the scope of the project, which will likely impact adoption rates and increase paper-based reporting.

- Strong dependencies on IRS and EDW data, but no evidence to demonstrate data-sharing agreements with system owners, or to demonstrate plans to account for IRS development projects.

- Critical dependencies on data-sharing agreements with provinces and system adoption by First Nations, which are not within the project team's control (further examined in Section 5.3).

The EIS project team has developed strategies to mitigate risks associated with project dependencies; accordingly, $3.26M has been allocated to a contingency fund. However, there is a lack of evidence to demonstrate that the strategies and contingency fund are sufficient to address the cumulative effect of multiple risk events (for example, the combination of multiple regional offices requiring additional resources to cleanse legacy data, timeline delays, and a significant degree of paper-based reporting once EIS goes live). This is of considerable importance given the number of project dependencies and the reliance on Departmental resources and infrastructure to support a pioneering performance measurement system.

Due to the number of external dependencies, and the associated implications, the September 2012 go-live date requires adequate time buffers to allow for scheduling slippage outside of the project's control. However, as discussed in Section 5.1.2 below, there is a lack of evidence to demonstrate that the schedule includes sufficient buffering.

5.1.2 Milestone scheduling

Key milestones per the EPA project Business Case are illustrated below in Figure 2 with higher risk scheduling activities highlighted in red. Between July 2012 and September 2012, the project plans to conduct deployment activities, which include:

- Data conversion and transfer of information from legacy systems to EIS

- Data extracts from EIS to the EDW for reporting

- User security profile set-up

- Code migration into production

Figure 2 - High-level project schedule highlighting areas of higher risk in red [Note 9]

This graphic illustrates the EIS development cycle and project progression timeline, indicating the main points in the cycle and emphasizing the critical phase of the project (the critical deployment activities and EIS go-live).

- September 2012: the system is introduced into production at one time through high risk deployment activities such as data conversion, EDW data extraction, user set-ups and code migration.

According to leading practices published by the United Kingdom Office of Government Commerce (OGC), one of the top reasons for government IT project failures is insufficient attention to breaking project development into manageable work steps. There is a lack of evidence to demonstrate that the risk of undertaking unmanageable work steps prior to go-live is being effectively mitigated for the EIS project, particularly with respect to data conversion activities.

Specifically, within a three-month window prior to go-live, key tasks have little buffering to allow for project delays or unexpected events associated with external dependencies. This is particularly risky because several aspects of the data conversions from legacy systems are dependent on business owners, as detailed in Appendix A. Data conversion is a challenging aspect of the implementation, as the project team has highlighted in their risk analysis. However, there is a lack of evidence to demonstrate that the likelihood and impact of data conversion risks are "moderate", as qualified by the project team.

Data conversion and consolidation projects can affect organizational effectiveness and efficiency, especially projects involving "legacy" databases. Based on research and leading practices, data conversion usually requires more time than anticipated. As a result, conversions are often rushed, so that problems are identified only after the conversion is complete and data quality has already suffered. To create a proper timeline and list of conversion expectations, preliminary data profiling and a data quality assessment are required. [Note 10] With data integrity and data cleansing outside of the EIS project scope, the planned conversions to commence in July 2012 represent a significant project risk, further amplified by the typical unavailability of program and operational resources over the summer months.
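To illustrate what preliminary data profiling can involve, the following is a minimal Python sketch that reports completeness per field and duplicate record keys across a set of legacy records. It is not drawn from the EIS project plans: the function name profile_records and the field names (student_id, school_code, grade) are hypothetical, and an actual assessment would depend on the structure of INAC's legacy systems and Data Collection Instruments.

```python
from collections import Counter


def profile_records(records, key_field, required_fields):
    """Summarize completeness and duplicate keys for a list of dict records.

    Hypothetical helper for illustration only; field names are assumptions.
    """
    missing = Counter()
    for rec in records:
        for field in required_fields:
            value = rec.get(field)
            # Treat None and blank strings as missing values.
            if value is None or (isinstance(value, str) and not value.strip()):
                missing[field] += 1

    # A key that appears more than once suggests duplicate legacy records.
    key_counts = Counter(rec.get(key_field) for rec in records)
    duplicates = sorted(k for k, n in key_counts.items() if n > 1 and k is not None)

    total = len(records)
    completeness = {
        field: (total - missing[field]) / total if total else 0.0
        for field in required_fields
    }
    return {
        "total": total,
        "missing": dict(missing),
        "duplicate_keys": duplicates,
        "completeness": completeness,
    }


if __name__ == "__main__":
    # Hypothetical legacy records with one duplicate key and two gaps.
    legacy = [
        {"student_id": "A1", "school_code": "S01", "grade": "5"},
        {"student_id": "A1", "school_code": "", "grade": "6"},
        {"student_id": "B2", "school_code": "S02", "grade": None},
    ]
    print(profile_records(legacy, "student_id", ["student_id", "school_code", "grade"]))
```

A profile of this kind, run well before July 2012, would give early evidence of how much cleansing the legacy data needs and therefore how much schedule buffer the conversion requires.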

5.1.3 Mitigation strategy

Interviews with management resulted in inconsistent information regarding the impact of missing the September 2012 go-live date and the mitigation strategy. In particular, after we communicated preliminary audit findings during the reporting phase of the audit, we were informed that the go-live date could be delayed by up to one month with little to no impact on project delivery. Following this one-month delay, the implementation could proceed with paper-based reporting and manual data entry. This information differed from that provided during the fieldwork phase of the audit.

Furthermore, it is not evident how EIS can be successfully deployed following a month-long delay with paper-based manual data entry; specifically, paper-based reporting is currently highly time-consuming for regional offices and a key driver for this system. There is no evidence to demonstrate that the current resource shortage will be augmented in 2012 to accommodate a paper-based EIS system that meets the reporting timeliness objectives set forth in project plans.

In addition, the mitigation strategy communicated during the reporting phase of the audit involves the option of switching to a phased approach mid-development whereby components of the application would be deployed in advance of go-live, with the proposal/recipient and performance measurement reporting components being deployed in subsequent iterations (as opposed to one that "phases" over annual reporting cycles). No evidence of this potential plan was made available in interviews and documents (including the options-analysis documentation) during the fieldwork phase of the audit. At the time of the audit, the following was not evident:

- Why this type of phased approach was not considered sooner in project planning documentation

- Why the managers interviewed were not aware of this option or the timeline mitigation strategy

- Whether this type of phased approach is feasible and, if so, why it is not the planned approach

- How the change would be communicated to First Nations and the expected impact on stakeholder confidence

Finding #1: There exists a lack of evidence to demonstrate that risks can be effectively mitigated under the planned project timeline using a single-release implementation. Specifically, the following issues introduce a significant level of risk to INAC:

- No planned pilot to identify and resolve issues prior to national implementation

- Insufficient buffering between key project milestones, particularly data conversion

- No evidence to demonstrate that critical project dependencies will align with the timeline

- Unclear mitigation strategy and consequences of delaying the project go-live date

Recommendation #1: In consultation with project stakeholders and the Education Performance Measurement Strategy team, assess whether preventive controls can be implemented to mitigate the risks. In particular, consider the following potential activities to manage the scheduling risks:

- Expand the EIS scope to include key external dependencies, particularly those for which INAC will eventually absorb the associated costs independent of the EIS project

- Re-examine the requirement for a September 2012 go-live date and consider doing the following, with appropriate adjustments to the project plan, budget and communication strategy:

  - Inserting greater scheduling buffers between key milestones, particularly for data conversion

  - Introducing a pilot implementation prior to the single-release go-live, or a phased roll-out, to identify and address critical issues prior to introducing the system to all users

5.2 Design feasibility

As previously discussed in Section 5.1, it is unclear whether the proposed schedule and go-live date are feasible. Similarly, there is a lack of evidence to demonstrate that the proposed design and implementation strategy are best-suited to INAC's business requirements. Because no independent feasibility study has been conducted to assess the project from all relevant viewpoints together (specifically, from technological, performance-measurement-strategic and economic perspectives), it is difficult to ascertain whether EIS can meet the stated objectives and whether the proposed implementation strategy is optimal in terms of risk management, resource efficiency and approach effectiveness. [Note 11]

The EIS project team started with a fixed budget and a fixed timeline, prior to finalizing the requirements and objectives for EIS. As a result, once analysis was performed to estimate cost and complexity for the requirements, the project scope was adjusted to conform to the budget and timeline constraints. Therefore, as previously discussed, many project dependencies are outside of the EIS project scope, but entail additional costs to INAC and represent areas of strategic risk to the EIS project. Three aspects of feasibility are examined in the following sections:

- Technological feasibility

- Performance measurement strategic feasibility

- Economic feasibility

5.2.1 Technological feasibility

The single-release implementation approach was selected by the project team based on an "options-analysis" process conducted prior to PPA that considered five options. During the PPA phase, the project team determined that the only solution capable of meeting business requirements was a "custom-built Web-forms on-line" solution. Two implementation strategies were considered for this design:

- Single-release implementation

- Multi-phased implementation (phased over annual reporting cycles)

During the PPA phase, the project team selected the single-release implementation approach, to be delivered by a team of consultants; this decision was re-affirmed by project team consultants during the EPA phase.

While the Business Case states that a phased implementation would reduce risk and provide the ability to learn and improve the system prior to final deployment, the team concluded that a multi-phased approach "would add little value and would introduce additional overhead by extending the length of the project to implement the same capabilities". However, the options-analysis made available for our review lacks information to justify the single-release implementation decision. Specifically:

- Analysis performed during the PPA phase does not consider potential costs outside of the project budget that would be incurred if the single release failed and required subsequent remediation

- Higher risks associated with a single release are omitted from the PPA comparative analyses

- EPA Business Case cost comparison omits cost estimates for a multi-phase solution because the approach is not considered to be a viable solution; therefore, the comparison reduces to an overview of the project team's selection

- EPA options-analysis indicates that public commitments and stakeholder delivery expectations can only be realized through a single-release solution; however, there is no evidence to demonstrate that these constraints were considered when the single-release solution was originally selected during the PPA phase

- The project plans made available for review demonstrate why a custom-built Web solution was selected over the status quo, commercial off-the-shelf (COTS) and fee-for-service solutions, but do not indicate why a single release was selected over a phased implementation

In addition, no evidence was available to demonstrate why a team of consultants (to be engaged under various procurement instruments) was selected over a single third-party service provider for the development and implementation of the custom-built Web-forms on-line system.

A second area of technological feasibility involves alignment with the Umbrella Education Performance Measurement Strategy. As further described in Section 5.3, if the business outcomes or requirements evolve, technical rework may be required, impacting EIS timeline and budget.

Due to the lack of information available, combined with the lack of an independent feasibility study, we were unable to validate that the implementation approach selected is optimal for INAC. More importantly, the lack of a high-level feasibility study to examine alignment between the EIS design and INAC's performance measurement strategic objectives creates significant enterprise risks for INAC.

5.2.2 Performance measurement strategic feasibility

As further discussed in Section 5.3, there is no evidence to demonstrate when, or if, all mandatory requirements and business outcomes will be met.

As well, there is no evidence to demonstrate what degree of electronic adoption by institutions is required for success. Specifically, the EIS system is being designed to accommodate paper-based reporting, which will require manual input at INAC regional offices. However, no evidence was made available to demonstrate what volume of manual input is feasible for INAC with current regional resources, regional operating budgets and project budget. While manual input is the current method of managing many educational reports, INAC has found that the volume of reporting results in inadequate analysis due to resource shortages. There was a lack of evidence available to demonstrate that the EIS hard-copy reports will be less time-consuming to enter into the EIS system than the current reports or that EIS adoption rates will be sufficient to reduce manual data entry.

A more significant aspect of EIS adoption involves data quality and data integrity, which are integral to performance measurement. We were informed of significant data integrity issues in INAC's existing educational data. Interviews indicated that EIS will act as an incentive for First Nations and institutions to provide higher-quality data and will reduce manual data-entry errors, thus addressing the current data quality challenges. However, this approach is inherently dependent on EIS adoption.

If data integrity is impaired following EIS implementation due to a lack of adoption and a high volume of paper-based reporting and manual data entry, the reports will not be reliable. An unreliable system may diminish the incentive for users to provide high-quality data, thus perpetuating the cycle of poor information. Both data integrity and data completeness are fundamental to the achievement of INAC's strategic objectives and to the success of EIS and are discussed further in Section 5.3.

5.2.3 Economic feasibility

Several costs to INAC are omitted from the EIS scope (including cleansing legacy data and negotiating and documenting internal and external data-sharing agreements), which will increase the total project cost to the Department. While these costs may be reasonable and accepted by key stakeholders, there was no evidence available to demonstrate that they have all been estimated or taken into account in the EIS pre-EPA stage, as detailed in Appendix A.

Another area of cost concern involves data quality and integrity, which is an inherent challenge for performance measurement across several industries, including education. In particular, significant data integrity issues involving the current Nominal Roll system and educational data at INAC were reported in a 2009-10 internal evaluation and communicated in audit interviews. [Note 12] No evidence was available to demonstrate that the total cost associated with addressing data integrity issues, which includes both data cleansing and ongoing data quality maintenance, has been estimated within a reasonable degree of precision. Moreover, the uncertainties regarding the degree of system adoption discussed in Section 5.2.2 may increase these costs and lead to ongoing data-cleansing requirements.

As outlined in Section 5.1, there is a lack of evidence to demonstrate that the current mitigation strategies are sufficient to manage the high number of project dependencies and Departmental changes required to introduce EIS in a manner such that business outcomes are achieved.

5.2.4 Summary

In summary, we found no evidence to demonstrate that several aspects of project feasibility have been independently assessed with a Department-wide view of strategy, indirect costs and technology. Given the size and complexity of EIS, a feasibility study could mitigate risks by validating the following success indicators in advance of significant resource and financial investment:

- Technological success factors:

  - Single-release solution timeline and implementation approach are feasible and best-suited to the business requirements

- Performance measurement strategic success indicators:

  - EIS development is aligned with INAC's performance measurement strategy for education, with adequate controls in place to maintain the alignment throughout EIS development and implementation

  - Expected EIS adoption rates are quantified, measurable and achievable

  - Reliability, integrity and completeness of data can be achieved and maintained to meet stated business outcomes

- Economic success factors:

  - Potential outcomes of external dependencies and their cumulative effect can be effectively managed with the project contingency fund

  - All indirect costs associated with the project are quantified and agreed to by stakeholders

Based on pre-EPA project plans, we did not find sufficient evidence to ascertain the existence of the above-listed success indicators. To achieve a sufficiently broad perspective, the feasibility study should be conducted independently of the project team; this approach also avoids the possibility of a conflict of interest.

Finding #2: No independent feasibility study was conducted to assess the EIS implementation approach and to confirm results of the EIS options-analysis. In particular, given EIS size, complexity and number of project dependencies, there is a lack of evidence to demonstrate that the implementation plan is strategically (with respect to performance measurement), technologically and economically feasible.

Recommendation #2: INAC should assess, independently of the EIS project team, whether the EIS system design is strategically (with respect to performance measurement), technologically and economically feasible. The Department should estimate the total cost of EIS ownership, based on all business and system requirements and stated outcomes, within a reasonable degree of precision.

5.3 Performance measurement outcomes

EIS's success depends on its ability to support the Department's performance measurement objectives. Key elements of EIS success, beyond the achievement of project timelines, budget, and technical specifications, are outlined in Appendix B.

Of the approximately 119,000 elementary and secondary school students who reside on reserves in Canada, approximately 60% attend schools on reserves and 40% attend off-reserve schools, the majority of which are under provincial authority. In addition to measuring performance for primary and secondary schools, EIS aims to measure performance for students attending post-secondary schools as well as for youth wage subsidy programs, SchoolNet sites, and cultural centres that are funded through INAC. The project intends to collect data according to 18 performance indicators specified by the Umbrella Education Performance Measurement Strategy group.

While EIS is being designed to meet the mandatory requirements for performance measurement, we have not found sufficient evidence to demonstrate that the data-related aspects of achieving the mandatory requirements can be accomplished. That is, while the system may have the technological capabilities to measure stated metrics, the business outcomes cannot be achieved if the data are not available and reliable to leverage these capabilities. This is particularly important given that two key performance indicators for EIS success are data integrity and data completeness, as stated in project plans made available for review. These aspects of EIS project success are discussed in the following sections, followed by a summary of progress toward stated business outcomes.

5.3.1 Data integrity

"Successful adoption" is considered a critical success factor throughout the documented project plans. To apply Departmental lessons learned from FNITP, where system adoption targets were not met, the EIS project devoted a significant portion of its preliminary funding to attaining better adoption rates. However, there is no evidence to demonstrate how adoption "success" is quantified; specifically, we found no evidence that First Nations "adoption" of EIS has been defined precisely enough to distinguish among the following degrees of adoption:

- Agreement to use the EIS system, including any associated hardware and software investments

- Agreement to share data pertaining to each of the key performance indicators

- Ability to track the key performance indicators consistently

- Method of reporting (for example, electronically on-line versus paper-based mail-ins)
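The point that "adoption" covers several distinct degrees can be illustrated by measuring each degree as its own rate. The sketch below is hypothetical: the four flags mirror the degrees listed above, but the class, field and function names, and any target values, are assumptions for illustration only and are not EIS definitions. A single headline adoption figure would hide, for example, an institution that agrees to use EIS but still mails in paper reports.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical field names, one per degree of adoption listed above.
DEGREES = (
    "agreed_to_use_eis",
    "agreed_to_share_kpi_data",
    "tracks_kpis_consistently",
    "reports_electronically",
)


@dataclass(frozen=True)
class InstitutionStatus:
    """Illustrative per-institution adoption flags (not an EIS data model)."""
    agreed_to_use_eis: bool
    agreed_to_share_kpi_data: bool
    tracks_kpis_consistently: bool
    reports_electronically: bool  # False means paper-based mail-in


def adoption_rates(institutions: List[InstitutionStatus]) -> Dict[str, float]:
    """Return the share of institutions meeting each degree, measured separately."""
    if not institutions:
        raise ValueError("no institutions supplied")
    n = len(institutions)
    # Summing booleans counts the institutions where the flag is True.
    return {d: sum(getattr(i, d) for i in institutions) / n for d in DEGREES}


def meets_targets(rates: Dict[str, float], targets: Dict[str, float]) -> bool:
    """True only if every degree named in the (hypothetical) targets is met."""
    return all(rates[name] >= target for name, target in targets.items())
```

Keeping the rates separate allows a target to be set per degree, for instance a higher target for data sharing than for electronic reporting.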

To gather consistently high-quality data, user buy-in is essential. Given the current challenges around data integrity communicated in interviews and previous education evaluations, the uncertain adoption rates imply significant risk and impact the stated EIS business outcomes, as detailed further in Section 5.3.2 and in Appendix C.

Another risk associated with adoption involves the mandatory requirement to reduce data-entry workload for First Nations, regions and headquarters. This requirement is dependent on adoption and the degree of paper-based reporting; therefore, without quantified adoption targets, there is a lack of evidence to demonstrate this will be achieved. Other mandatory requirements and their dependence on data completeness are discussed in Section 5.3.2 below.

A further aspect of data integrity involves EIS data exchanges with the IRS. A mandatory EIS requirement is to verify the student's IRS number at data entry, including matches on date of birth and last name. We have noted several data integrity issues regarding the IRS in an ongoing audit of the Secure Certificate of Indian Status (SCIS). Therefore, while data validation between EIS and the IRS may provide an opportunity to improve data consistency across systems, there may be significant operational challenges to overcome, particularly given a planned upcoming IRS development initiative. While not directly in support of the findings and recommendations in this report, we recommend that data-sharing agreements be established with IRS owners without delay and that appropriate escalation protocols be established and documented to manage data differences in a timely manner.
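The verification requirement can be pictured as a simple matching rule. The following is a minimal sketch only, assuming a registry lookup keyed by registration number; the record fields, function names and name-normalization rule are hypothetical and are not drawn from EIS or IRS specifications. It shows how mismatches could be returned as a list of discrepancies for escalation rather than silently accepted or discarded.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional


@dataclass(frozen=True)
class StudentRecord:
    """Hypothetical student record as entered (field names are illustrative)."""
    registration_number: str
    last_name: str
    birth_date: date


def normalize_name(name: str) -> str:
    # Assumed rule: compare names case-insensitively, ignoring surrounding spaces.
    return name.strip().casefold()


def verify_against_registry(
    entered: StudentRecord,
    registry: Dict[str, StudentRecord],
) -> List[str]:
    """Return discrepancies between an entered record and the registry.

    An empty list means the record verified; a non-empty list would be
    routed to a documented escalation protocol.
    """
    match: Optional[StudentRecord] = registry.get(entered.registration_number)
    if match is None:
        return ["registration number not found"]
    issues: List[str] = []
    if normalize_name(match.last_name) != normalize_name(entered.last_name):
        issues.append("last name mismatch")
    if match.birth_date != entered.birth_date:
        issues.append("date of birth mismatch")
    return issues
```

For example, with a registry containing registration number "1234567890" for "Smith", born 2001-05-14, an entry of " smith " with the same date verifies with no discrepancies, while the same entry with a different birth date returns "date of birth mismatch".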

5.3.2 Data completeness

In addition to hindering data integrity, low adoption rates may impact data completeness. Specifically, subsets of data supporting the 18 performance indicators specified by the Umbrella Education Performance Measurement Strategy were split by the program into three tiers as follows:

- Tier 1: data currently collected by the Department

- Tier 2: data to be collected and analysed with the implementation of the EIS in September 2012

- Tier 3: data requiring further analysis regarding data collection (based on interviews, we understand that Tier 3 data is that which INAC would like to collect, but has not yet determined how to do so or has not yet attained stakeholder agreement to provide this data)

Of 45 subsets of data supporting the performance indicators, 20 are in Tiers 2 and 3. There is a lack of evidence to demonstrate that Tier 2 data will be effectively collected by institutions that do not adopt EIS when the system goes live in September 2012. Similarly, even if INAC determines how to collect Tier 3 data in the future, there is a lack of evidence to demonstrate it can be effectively collected from institutions that do not adopt EIS.

It is unclear what percentage of institutions and percentage of students must be tracked by EIS to achieve the requirements and fulfill the project objectives. Provincial data-sharing agreements are not within the scope of the EIS project and are not expected to be in place when EIS is implemented. Provincial institutions encompass approximately 40% of the primary and secondary student population that INAC seeks to track. This directly impacts INAC's ability to meet mandatory requirements listed in the EIS requirements document.
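The scale of the completeness gap can be made concrete with the figures already stated in this section. This is a back-of-envelope calculation only: the constants are taken from the text above, the function names are illustrative, and the 40% figure is used as the provincial share as stated in the preceding paragraph.

```python
# Approximate figures as stated in this report.
TOTAL_SUBSETS = 45           # data subsets supporting the 18 performance indicators
TIER_2_AND_3_SUBSETS = 20    # subsets not currently collected by the Department
ON_RESERVE_STUDENTS = 119_000
PROVINCIAL_SHARE = 0.40      # share of students in provincial institutions


def share_not_currently_collected(total: int, not_collected: int) -> float:
    """Fraction of data subsets that depend on EIS or on further analysis."""
    return not_collected / total


def students_without_agreements(total_students: int, provincial_share: float) -> int:
    """Approximate number of students not covered without provincial agreements."""
    return round(total_students * provincial_share)


tier_1 = TOTAL_SUBSETS - TIER_2_AND_3_SUBSETS
print(f"Tier 1 subsets: {tier_1} of {TOTAL_SUBSETS}")
print(f"Subsets not currently collected: "
      f"{share_not_currently_collected(TOTAL_SUBSETS, TIER_2_AND_3_SUBSETS):.0%}")
print(f"Students in provincial institutions: "
      f"~{students_without_agreements(ON_RESERVE_STUDENTS, PROVINCIAL_SHARE):,}")
```

On these figures, about 44% of the data subsets are not currently collected, and roughly 47,600 students attend provincial institutions for which no data-sharing agreement is expected at go-live.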

In addition to challenges with data being available and reliable, there was a lack of evidence to demonstrate how students would be individually tracked. The EIS project plans to leverage the IRS registration numbers assigned to Status Indians to track students; however, non-Status Indians, including Inuit, are not registered in the IRS. While plans for a unique student identifier are discussed in project documentation, there was no evidence available to demonstrate how such a tracking mechanism would operate (including how and when students are assigned a number).

While mandatory requirements may pertain specifically to system functionality, the business outcomes listed in the EIS requirements document are dependent on requirements being met at both a system-functionality level and at the programmatic level. That is, it is not sufficient that EIS be technologically capable of meeting requirements; the overarching education program must have the means to leverage this technology with complete, high-quality data to provide reliable and timely reports.

For example, a mandatory requirement is to track students and standardized test results between schools, both on and off reserve. However, there is no evidence to demonstrate that this can be measured (and the requirement met) if data is not gathered from all institutions, including provincial institutions. Because this data is currently not available, there is no evidence to demonstrate this requirement can be met without data-sharing agreements, sufficient EIS adoption and an ability to track individual students. Similarly, there is a high likelihood that the following mandatory requirements will not be met without sufficient data completeness:

- Record when a student graduates upon completion of their program, both secondary and post-secondary levels, and record if there was employment as a result

- Track students through all education related programs; seamlessly track student's history from elementary to secondary to post-secondary, including Special Education needs, Youth Employment and any other education related programs

- Report on students, including history, programs of study, full-time/part-time status, type of institute and degree programs (available for the students/by student)

- Obtain provincial education curriculum approval certification information

The impact of not meeting these mandatory requirements on business outcomes is outlined in Table 1 below and further detailed in Appendix C.

Table 1 - Business outcomes impacted by data completeness and integrity, which depend on provincial data sharing and EIS adoption rates (further detailed in Appendix C)

Likelihood ratings used in Table 1:

- Very high likelihood of outcome not being achieved (no evidence outcome can be met)

- High likelihood of outcome not being achieved (achievement unlikely)

- Moderate likelihood of outcome not being achieved (unknown likelihood of achievement)

| Business outcomes at risk of not being met due to a lack of data completeness and integrity | Likelihood of outcome not being achieved |
|---|---|
| Provide a complete picture of all education program results by school and community (where privacy permits), by region and nationally, as well as by geographic zone. |  |
| Provide the context to meaningfully measure student outcomes (e.g. socio-economic, school infrastructure), which is not currently possible. |  |
| Track students and cohorts over time from kindergarten through secondary school and potentially through post-secondary education, which is not currently possible. |  |
| Implement INAC's First Nation education performance measurement strategy to provide more information on results, and contribute towards informed improvement and increased accountability for both INAC and First Nations. |  |
| Improve management of the education programs and increase accountability by reducing the reporting burden, simplifying the reporting process, and providing timely reports on program management and performance measurement. |  |
| Improve data quality. |  |

Data availability and integrity are critically important; without end-user buy-in, EIS reports may become unreliable, with entire student populations omitted from comparative analyses. Based on performance measurement research, the relationship between inputs and outputs is generally easy to measure, whereas the relationship between inputs and outcomes is much more challenging to measure [Note 13]. It is not evident that performance can be effectively measured without a representative population of students being tracked. For instance, students who transfer between provincial and band-operated schools, or who move on and off reserve, may skew performance indicator results, since it would be unknown whether those students had dropped out.

Inconsistent awareness of performance measurement objectives was observed in interviews; specifically, management had differing expectations for EIS usage following implementation. While the system functionality expectations were communicated consistently, the expected ability and associated timeline for leveraging the functionality (in terms of usable reports) was unclear.

5.3.3 Summary

If, at any point in the project development lifecycle, it is determined that mandatory requirements and business outcomes cannot be met, we would expect to find a reassessment of the project, its objectives and the proposed design. Specifically, it should be determined whether INAC's performance measurement goals can be met with partial student populations, and what degree of system adoption is required to successfully measure educational performance (i.e. statistical relevance). If the original business outcomes and mandatory requirements are considered essential to the Department, we would, similarly, expect to find further investments being considered to address these external dependencies. There was no evidence available to demonstrate that this analysis was conducted or that the mandatory requirements and business outcomes were revised to reflect data constraints.

A broad area of concern noted throughout audit interviews was the evolution of performance measurement requirements and the inconsistent expectations for EIS usage. Effective performance measurement is dependent on consistent reporting; trends can only be established if historical data is reliable and captured consistently on a periodic basis. Therefore, it is important that the data be of primary focus; data availability, reliability and expected usage should be well understood prior to developing a system. There was a lack of evidence available to demonstrate that the EIS plans are founded on stable performance measurement requirements and expected outcomes. The OGC indicates that a lack of co-ordination between project teams and departmental strategic objectives is the first of eight root causes for government IT project failures, highlighting the relevance of these strategic alignment risks.

In summary, it is not evident what performance measurement requirements can be met under the current project plans. While EIS appears to be on track to deliver a system that consolidates data, provides electronic reporting and increases the maturity and experience of the IT function at INAC, the complex functionality being invested in to enable performance measurement may not be leveraged without a programmatic ability to collect complete and reliable data.

Finding #3: There is a lack of evidence to demonstrate that EIS will result in the achievement of INAC's performance measurement objectives. While the technological capabilities may be in place to meet EIS requirements, there is no evidence to demonstrate that reliable and complete data will be in place and collected to leverage the technology and achieve the business outcomes.

Recommendation #3: In conjunction with Recommendation #2, INAC should clearly define what adoption rates and data are required to meet the mandatory requirements and stated business outcomes. Focus should be placed on the ability to collect reliable data prior to designing a system to house the data. Specifically, the performance measurement outcomes and associated timelines should be clearly defined to prevent overspend or rework on a system that cannot be effectively leveraged within a relevant timeline.

6. Conclusion

We cannot conclude that the EIS project is on track to deliver a system, processes and controls that will securely and reliably administer a comprehensive national education information resource.

Given the importance, size and complexity of EIS, we found a lack of evidence to demonstrate that the proposed EIS design and implementation strategy are feasible given the constraints and external dependencies. More important, there was no evidence available to demonstrate how the project will achieve the mandatory requirements and business outcomes, enabling the Department's performance measurement objectives. In particular, without data-sharing agreements with provinces and specified adoption targets by First Nations institutions, there is a high likelihood that EIS will not meet mandatory project requirements and performance measurement objectives.

While EIS appears to be on track to deliver a system that consolidates data, provides electronic reporting and increases the maturity and experience of the IT function at INAC, the complex functionality being invested in to enable performance measurement may not be leveraged without a programmatic ability to collect complete and reliable data. EIS will be unsuccessful if INAC lacks the tools and information to leverage the technology, even if the system is built according to the technical specifications. Moreover, without a complete understanding of the performance measurement requirements and data, EIS requirements and expectations are likely to evolve, creating significant risk for the project.

We recommend that INAC examine its performance measurement expectations and validate that reliable and complete data will be gathered consistently to appropriately leverage EIS technology prior to investing in system capabilities. A timeline of expected performance measurement outcomes and an estimate of the complete cost of ownership should then be developed. Finally, the EIS implementation approach, timeline and mitigation strategy should be re-examined independently of the project to validate that they are appropriately aligned to Departmental and TB expectations and best-suited to the business requirements.

7. Management Action Plan

INAC's Audit and Evaluation Sector (AES) engaged Ernst & Young to conduct an audit of EIS while it was in its early pre-Effective Project Approval (EPA) development stages. The audit was conducted while the EPA Submission documents and associated project deliverables were being completed, and progress relevant to the audit findings has been made since, including approval by the Treasury Board Ministers. The management response reflects the progress the project has made since the documents reviewed by the Audit Team were drafted.

Based on the Audit Findings, the Audit Report made three (3) specific recommendations that are addressed below.

Table 2: Audit Recommendations

| # | Audit Recommendations | Action Plan | ||

|---|---|---|---|---|

| Plan | Date | Owner | ||

| 1. |

In consultations with project stakeholders and the Education Performance Measurement Strategy team, assess whether preventive controls

can be implemented to mitigate the risks. In particular, consider the following potential activities to manage the scheduling risks:

|

The preventive controls recommended by the audit to mitigate scheduling related risks were identified and addressed during the finalization of the EPA Submission.

Key related activities during the EPA finalization process included:

|

||

|

Additionally the project will:

1. Develop a data cleansing plan based on the current conversion strategy that details roles/responsibilities, timelines and costs and is signed-off by impacted stakeholder groups |

2011-03-31 | DG-Educ | ||

2. Develop an implementation strategy based on the current implementation approach that:

|

2011-03-31 | CIO | ||

| 2. | INAC should assess, independently of the EIS project team, whether the EIS system design is strategically (with respect to performance measurement), technologically and economically feasible. The Department should estimate the total cost of EIS ownership based on all business and system requirements and stated outcomes, within a reasonable degree of precision. |

The recent completion of the EPA submission and approval by Senior INAC management and the Treasury Board provided the project with a review of technological, strategic and economic alignment. In order to optimize additional review opportunities the project will ensure the two (2) independent validation and verification reviews that have been built into the project schedule include assessment activities to determine if the project is still on track and has adequately addressed the key audit findings.

|

||

|

Additionally the project will undertake the following actions:

1. Update the project forecast and identify project dependencies that can be funded by the Project |

2010-11-30 | DG-Educ ADM - | ||

| 2. Confirm funding source for remaining dependencies | 2010-12-31 | ESDPP /CIO | ||

| 3. Adjust the project plan to accommodate any shortfall related to the funding of project dependency activities | 2011-02-28 | DG-Educ | ||

| 3. | In conjunction with Recommendation #2, INAC should clearly define what adoption rates and data are required to meet the mandatory requirements and stated business outcomes. Focus should be placed on the ability to collect reliable data prior to designing a system to house the data. Specifically, the performance measurement outcomes and associated timelines should be clearly defined to prevent overspend or rework on a system that cannot be effectively leveraged within a relevant timeline. |

The project is actively addressing the ability to collect reliable data and will continue to address the related concerns by:

1. Obtaining Departmental approval of Education's Performance Measurement Strategy |

2010-11-30 | DG-Educ |

| 2. Identifying key success criteria including adoption rates | 2010-12-31 | DG-Educ | ||

| 3. Finalizing the current data mapping activities (tracing the availability of data required to support the planned performance measures) | 2010-12-31 | DG-Educ | ||

| 4. Finalizing the changes to the Data Collection Instruments required to support EIS performance measurement | 2010-12-31 | DG-Educ | ||

Appendix A - Summary of key external dependencies

This Appendix lists critical EIS project dependencies discussed in Sections 5.1 and 5.2, ordered by the party responsible for the majority of associated costs (i.e. the "owner"). The audit observations and risks pertaining to each dependency are detailed in the table below, as well as a risk likelihood assessment pertaining to the following project areas of constraint:

- Implementing EIS within project delivery schedule ("schedule delays" assessed below)

- Remaining within project budget ("additional costs" assessed below – these include budget overruns as well as costs to INAC and First Nations outside of EIS scope)

- Achievement of mandatory requirements and business outcomes ("quality impaired" assessed below)

To summarize, we have identified 14 external dependencies that create risks in the three main areas of project constraint: schedule, cost and quality. Six of the dependencies are "owned" by the EIS project, while the remaining eight are the responsibility of First Nations and INAC business owners. Based on current project plans, several factors outside the project team's control may affect project success; in addition, the project plans entail additional costs to the Department that are not included in the project budget.

| Dependency | Owner | Observations, risks and reasoning for likelihood assessment | Likelihood assessment: schedule delays | Likelihood assessment: additional costs | Likelihood assessment: quality impaired |
|---|---|---|---|---|---|
| IRS data exchange | EIS | | High | Moderate | High |
| Alignment with Education Performance Measurement Strategy | EIS | | High | Moderate | High |
| Development of FNITP system integration (result of changes to project plan following PPA) | EIS and INAC | | High | Moderate | Moderate |
| Resource transition between 2 separate procurement phases ("tiers") of contracting | EIS | | High | Moderate | Low |
| EDW data exchange | EIS | | High | Moderate | Low |
| Privacy constraints | EIS | | Moderate | Moderate | Moderate |
| First Nations' hardware and software | First Nations | | Moderate | High | Moderate |
| First Nations' Internet connectivity | First Nations | | Low | Moderate | High |
| First Nations adoption of EIS, including methods of use | First Nations and INAC | | Low | High | High |
| Data cleansing | INAC | | High | High | High |
| Region-specific DCIs | INAC | | Moderate | Moderate | High |
| Data-sharing negotiations and establishment of documented data-sharing agreements | INAC | | Moderate | Moderate | High |
| Data entry for paper-based reporting into EIS | INAC | | Not applicable* | Moderate | High |
| Ongoing support costs in excess of $450K annual funding (determined to be inadequate during PPA phase) | INAC | | Not applicable* | High | Moderate |
| Decommissioning of legacy systems | INAC | | Not applicable* | Low | High |

* Considered "not applicable" to the project delivery schedule because these activities are to begin after the project delivery target date.

Appendix B - Key elements of Education Information System success

The success of EIS involves not only IT project success, which can be defined as delivering a system to specification, but also an organizational ability to leverage the technology and achieve strategic objectives related to performance measurement. Based on the information made available during the audit, we consider the following criteria to be essential EIS success factors (beyond the achievement of project timelines, budget, and technical specifications):

- EIS usage by INAC and First Nations

- Data completeness

- Data integrity

- Data security

- Usage of EIS information to inform program and policy direction and First Nation school improvement planning

- Reduction in work burden

- Alignment and effective support of INAC's Umbrella Education Performance Measurement Strategy

- Ability to effectively measure the 18 education performance indicators specified by the Umbrella Education Performance Measurement Strategy

- Effective support of INAC's commitment in the Department's 2009-10 Report on Plans and Priorities to:

  - Develop robust performance indicators that focus on outcomes

  - Strengthen information and resource management capacities in direct support of strategic outcome planning and enhanced stewardship of resources

- Provide a complete picture of all education program results by school, community, region, geographic zone, and nationally

- Provide the context to meaningfully measure student outcomes (e.g. socio-economic, school infrastructure)

- Track students and cohorts over time from kindergarten through secondary school and potentially through post-secondary education

- Implement INAC's First Nation education performance measurement strategy to provide more information on results, and contribute towards informed improvement and increased accountability for both INAC and First Nations

- Improve management of the education programs and increase accountability by:

  - Reducing reporting burden by minimizing redundancy and simplifying the reporting process

  - Improving data quality by streamlining information-gathering

  - Enabling early detection of anomalies to trigger automatic review of reports

  - Linking education program results with INAC's financial information to better assess expenditures relative to results

  - Providing timely reports on program management as well as performance measurement

- Improve data quality

- Work with First Nations to develop performance indicators that will be the basis for determining enhanced data requirements for EIS

- Support auditing functions to log activity (who, what and when) in accessing EIS information

- Improve the ability to train new users on an ongoing basis, and to train users on system changes

- Make EIS user-friendly to encourage First Nations usage of the system

- Accommodate varying levels of uptake by First Nations by providing user interfaces and data capture alternatives that reflect the varying levels of connectivity, user computer skills and comfort with the technology, thus reducing training requirements and minimizing technical and help-desk support

Appendix C - Business outcomes dependent on sufficient adoption and data sharing

The table below provides further details on the business outcomes (outlined in Table 1 of Section 5.3) that depend on mandatory requirements that may not be met. Specifically, without sufficient data sharing with First Nations and provincial schools, there is no evidence that EIS is on track to achieve these outcomes. While the system may be designed to provide the required technological capabilities, the business outcomes cannot be achieved unless data is available to leverage these capabilities.

Legend (based on pre-EPA status and project plans):

Business outcomes at risk of not being met due to a lack of data completeness and integrity

Very high likelihood of outcome not being achieved (no evidence outcome can be met)

High likelihood of outcome not being achieved (achievement unlikely)

Moderate likelihood of outcome not being achieved (unknown likelihood of achievement)

| Business outcome | Observation and assessment | |

|---|---|---|

| Provide a complete picture of all education program results by school and community (where privacy permits), by region and nationally, as well as by geographic zone. | Dependent on data-sharing with all educational institutions, including provincial institutions. In addition, certain First Nations have negotiated special agreements or self-government. In some cases, these First Nations do not have the same reporting obligations for certain areas of the education program (either in the level of detail required or in the obligation to report at all). This may also hinder the ability to provide a "complete" picture, in line with the business outcome. | |

| Provide the context to meaningfully measure student outcomes (e.g. socio-economic, school infrastructure), which is not currently possible. | Dependent on advanced performance measurement techniques, as well as on the completeness and reliability of data, impacted by First Nation adoption rates and provincial data sharing. |  |

| Track students and cohorts over time from kindergarten through secondary school and potentially through post-secondary education, which is not currently possible. | Dependent on completeness and integrity of data, which depend on First Nation adoption rates and provincial data-sharing, as well as on the capability to track students (and cohorts) who migrate on and off reserves. |  |

| Implement INAC's First Nation education performance measurement strategy to provide more information on results, and contribute towards informed improvement and increased accountability for both INAC and First Nations. | Dependent on completeness and integrity of data, which depend on First Nation adoption rates and provincial data-sharing. |  |

| Improve management of the education programs and increase accountability by reducing the reporting burden, simplifying the reporting process, and providing timely reports on program management and performance measurement. | Dependent on adoption rates and methods of data collection (paper-based, PDF, on-line data entry, electronic extracts mailed to INAC or local system uploads). |  |

| Improve data quality. | Dependent on adoption rates and methods of data collection (paper-based, PDF, on-line data entry, electronic extracts mailed to INAC or local system uploads), as well as on effective data cleansing. |  |

Footnotes:

- Also referred to as "the Department" in this report

- The audit was executed in conformity with the Treasury Board Policy on Internal Audit and followed the Institute of Internal Auditors' Standards for the Professional Practice of Internal Auditing. It does not constitute an audit or review in accordance with any Generally Accepted Auditing Standards (GAAS).

- INAC Social Programs

- INAC Education

- Based on interviews, this capability refers to storing data such that users can generate reports on annual results as well as on historical trends for cross-sectional populations (e.g. student cohorts)

- Timeline is as described in the EIS Business Case

- K. Eason (1988), "Information Technology and Organisational Change", Taylor & Francis; M. A. Ardis and B. L. Marcolin (2001), "Diffusing Software Product and Process Innovations", Kluwer Academic Publishers; Organisation for Economic Co-operation and Development (2007), "Performance Budgeting in OECD Countries".

- A. Maydanchik (2007), "Data Quality Assessment (from the Data Quality for Practitioners Series)", Technics Publications LLC.

- Timeline is as described in the EIS Business Case

- A. Maydanchik (2007), "Data Quality Assessment (from the Data Quality for Practitioners Series)", Technics Publications LLC.

- H. Kerzner (2004), "Advanced Project Management: Best Practices on Implementation", John Wiley and Sons.

- "Formative Evaluation of the Elementary/Secondary Education Program On Reserve"

- Institute of Public Administration of Canada (IPAC) (2008)