Archived - RMAF Special Study

Archived information

This Web page has been archived on the Web. Archived information is provided for reference, research or record keeping purposes. It is not subject to the Government of Canada Web Standards and has not been altered or updated since it was archived. Please contact us to request a format other than those available.

Date: August 13, 2008

PDF Version (176 Kb, 33 pages)

Table of Contents

- Executive Summary

- Chapter One: Introduction & Methodology

- Chapter Two: RMAF Quality and Completeness

- Chapter Three: Status of RMAF Implementation

- Chapter Four: Conclusion

- Recommendations

- Areas for Further Investigation

- Appendix A: RMAF Special Study Terms of Reference

- Appendix B: Treasury Board Secretariat RMAF Guidelines

- Appendix C: RMAF Review Template

- Appendix D: RMAF Special Study Survey to Program Managers on Data Collection

- Appendix E: Treasury Board Submission Inventory (available upon request)

- Appendix F: RMAF Inventory by Strategic Outcome (available upon request)

- Appendix G: Survey Responses (available upon request)

- Appendix H: Complete List of Indicators (from the surveys) (available upon request)

Executive Summary

Following the introduction of Results for Canadians in 2000, programs seeking approval or renewal through Treasury Board have been required to submit a Results-Based Management Accountability Framework (RMAF). These RMAFs are intended as a tool, allowing managers to think through their programs from a results-based perspective and to clearly set out plans (and responsibilities) for performance measurement, evaluations, and reporting.

Until now, no formal tracking of the RMAFs developed for programs at Indian and Northern Affairs Canada (INAC) has been undertaken. The objective of this study is to present a portrait of the current state of results-based management at INAC by examining the quality, coverage, and level of implementation of RMAFs in the department. This project was carried out in four steps. First, an inventory of RMAFs in the department was done, with a total of 59 RMAFs found. Second, each RMAF was assessed and scored using a template developed from Treasury Board criteria. Third, they were mapped out against INAC's Program Activity Architecture (PAA) to determine RMAF coverage across each departmental Strategic Outcome area. Finally, a survey was developed and distributed to program managers to determine the extent to which RMAFs, particularly the data collection and reporting plans they contain, are being implemented.

This study found that RMAFs developed at INAC are generally of high quality. However, several key areas for improvement were also identified. In general, each assessed RMAF clearly laid out objectives, expected results, and a logic model in accordance with Treasury Board criteria. While also generally acceptable, the evaluation plans of many RMAFs lacked solid data collection information and other key details on the evaluation plan, including issues and proposed methodologies. In many cases, an overwhelming number of performance indicators, often output-focused (a burden for both program managers and First Nations to collect), sometimes took the place of a few key indicators that clearly linked activities to outputs and outcomes. Finally, responsibility for collecting and reporting also needs to be more clearly identified. Improvement in these areas would help RMAFs be a more useful tool for managers.

Data collection and RMAF implementation were found to be comparatively more problematic than RMAF quality. Results from the surveys to managers indicate that data is being collected for approximately 42 per cent of the performance indicators listed in RMAFs. Issues with data collection ranged from indicators being reassessed for their usefulness during the course of the program to more serious problems, including a lack of capacity (in both program recipients and program managers) to collect, report, and analyze data. Other issues in data collection may be linked to a problem identified earlier: too many indicators are listed for measurement, many of which are output-focused. This study found no areas of significant overlap in data collection, and as such identified no areas where duplication could be eliminated.

Chapter One: Introduction and Methodology

Over the past several years, the Treasury Board has introduced the requirement that a Results-Based Management Accountability Framework (RMAF) be submitted for most programs seeking Treasury Board approval or renewal. Although the programs of Indian and Northern Affairs Canada (INAC) have drafted RMAFs to meet this requirement as their funding authorities have come up for Treasury Board renewal, no monitoring mechanism has ever been established to track the development, approval, and implementation of RMAFs department-wide. As such, the Audit and Evaluation Sector conceived of this RMAF Special Study project in the spring of 2008 to develop a more complete picture of RMAF quality and implementation. The project was assigned to our group of eight interns.

The RMAF Special Study Terms of Reference (Appendix A), developed by our team in collaboration with the management of the Audit and Evaluation Sector and approved by the Audit and Evaluation Committee on June 27, 2008, listed four steps for the RMAF Special Study. Briefly, these steps were:

- Construct an inventory of all INAC RMAFs approved by the Treasury Board since the year 2000.

- Determine the position of each RMAF within INAC's Program Activity Architecture (PAA).

- Assess the quality and completeness of each RMAF.

- Determine to what extent each RMAF has been implemented, with particular focus on Performance Measurement Plans (PMPs).

For our first step, our group reviewed every one of the approximately 350 INAC Treasury Board submissions approved from January, 2000 through April, 2008. Through this process, we initially identified 62 RMAFs and similar documents. A record of this phase of the project is included in Appendix E. Then we narrowed the focus to 49 that are directly and primarily applicable to INAC programs. Some of the initial 62 were excluded, for instance, because they were primarily written by and/or the responsibility of another department.

We handled steps two and three simultaneously. Each of the 49 RMAFs was reviewed individually by two members of our team. Reviewers identified the Strategic Outcome, Program Activity, and Sub-Activity for each RMAF according to INAC's 2009-2010 Program Activity Architecture, and evaluated the quality and completeness of each RMAF.

For the evaluation process, our group developed nine assessment categories based on the Treasury Board Secretariat RMAF guidelines. Each RMAF received a score between one and three in each category depending on the extent to which it conformed to the guidelines. The Treasury Board Secretariat RMAF guidelines are attached as Appendix B and a blank RMAF assessment template is attached as Appendix C.

Our team defined a three point scale for each category as follows:

- 1/3: The component is either missing entirely or is missing several key elements.

- 2/3: The component generally meets most of the minimum requirements but is in need of improvement (e.g. specific required details are absent, or the component exists but is unclear).

- 3/3: The component is clear, logical, and meets all requirements [Note 1].

The nine scores were then added to give each RMAF a total score out of a maximum possible 27 points. Once all reviewers had finished their individual evaluations, each pair of reviewers for each RMAF compared their results, discussed differences, and agreed on a final single set of PAA information, scores, and comments. The "Merged RMAF Assessment PMF#" column in the table included in Appendix F contains PMF numbers for the completed evaluation templates containing each RMAF's scores. The final PAA information for each RMAF can be found in the same table.
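The scoring arithmetic described above can be illustrated with a minimal sketch. It assumes the nine category scores are held as a plain list; the function names and the sample scores are hypothetical and are not taken from the review template in Appendix C.

```python
CATEGORY_COUNT = 9            # nine assessment categories per RMAF
MIN_SCORE, MAX_SCORE = 1, 3   # three-point scale used for each category


def total_score(category_scores):
    """Add nine category scores (each 1-3) into a total out of 27."""
    if len(category_scores) != CATEGORY_COUNT:
        raise ValueError(f"expected {CATEGORY_COUNT} scores, got {len(category_scores)}")
    for score in category_scores:
        if not MIN_SCORE <= score <= MAX_SCORE:
            raise ValueError(f"score {score} is outside the 1-3 scale")
    return sum(category_scores)


def disagreements(reviewer_a, reviewer_b):
    """Return the category positions where the two reviewers scored differently."""
    return [i for i, (a, b) in enumerate(zip(reviewer_a, reviewer_b)) if a != b]


# Hypothetical scores from the two reviewers of a single RMAF.
reviewer_a = [3, 3, 2, 3, 2, 2, 1, 2, 3]
reviewer_b = [3, 2, 2, 3, 2, 3, 1, 2, 3]

to_discuss = disagreements(reviewer_a, reviewer_b)  # categories the pair must reconcile
agreed = [3, 3, 2, 3, 2, 2, 1, 2, 3]                # hypothetical scores agreed after discussion
final_total = total_score(agreed)
print(to_discuss, final_total)
```

The bounds follow directly from the scale: nine scores of one give the minimum total of 9, and nine scores of three give the maximum of 27.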

Finally, for the fourth step, our team developed a Program Manager Survey to determine the extent to which RMAF PMPs are being implemented at INAC. The most important aspect of the survey was a table in which program managers could indicate which of the data indicators listed in the RMAF are still being collected, how often they are collected, and how the collected indicators are being used. Additional survey questions asked whether the data being collected was sufficient, why data was not being collected, and what key barriers to data collection exist. A blank survey is attached as Appendix D. Additionally, the "survey PMF#" column in the table included in Appendix G contains the relevant PMF numbers for all of the surveys that were returned completed.

Surveys were distributed to all INAC Assistant Deputy Ministers along with a letter asking that they ensure the proper program administrators fill out one survey for each RMAF. As our group received completed surveys back, we analyzed the information programs provided on the data they were currently collecting in comparison to the data collection commitments listed in the PMP of the relevant RMAFs.

Chapters Two and Three of this report list and explain the conclusions our group has developed with regard to steps three and four. Chapter Two describes the results of our analysis of the RMAFs we collected, and Chapter Three describes the results of the Program Manager Surveys that were returned.

Chapter Two: RMAF Quality and Completeness

2.1 Findings Based on Overall RMAF Scoring

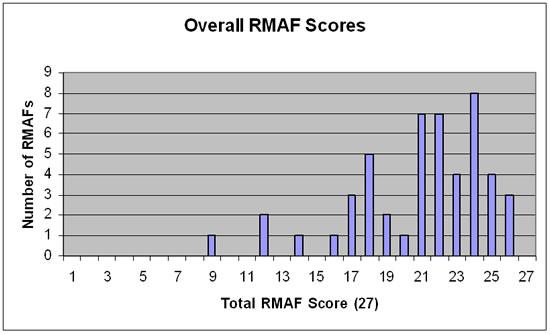

Nine components of each RMAF were evaluated on a scale of one to three. As such, the lowest possible overall score that an RMAF could be given was nine while the highest score was 27. The chart below shows the number of RMAFs that received each overall score.

Our key findings based on the overall scoring are listed below.

- The average overall score was 22 (out of 27).

- The majority of RMAFs were clustered with overall scores between 21 and 26.

- Only one RMAF received the minimum score of nine.

- Those RMAFs that received a low score (less than 20) tended to exhibit the following common characteristics: missing sections, missing components within sections, underdevelopment of sections / lack of detail, and lack of clarity.

These findings seem to indicate that overall, the quality of RMAFs produced within the department tends to be acceptable. The clustering of most RMAFs within the 21-26 range implies that RMAFs generally meet the minimum Treasury Board Secretariat guidelines, while some improvements in specific areas could be made.

2.2 "Objectives / Planned Results / Logic Model" Overall Findings

The first three sections of the RMAF evaluations looked at the stated program Objectives, the program Planned Results, and the RMAF Logic Model. The program Objectives section is a description of what the program is trying to achieve. The Planned Results section outlines the expected immediate, intermediate and ultimate outcomes that the program expects to have influenced. The Logic Model shows how the program links its activities, outputs, outcomes, and objectives together.

- The evaluation of the Objectives was based on the usual 3-point scale, and the review criterion was that the objectives be stated clearly.

- The evaluation of the Planned Results section was broken into two sub-categories that were each reviewed on the 3-point scale. The criterion for the first sub-category was that Expected Outcomes be logical and connected to each other and the objectives. The review criterion for the second sub-category was that the Expected Outcomes be realistic and achievable.

- The evaluation of the Logic Model was also on the 3-point scale, and the criterion was that the model be clear and well articulated (logical flow).

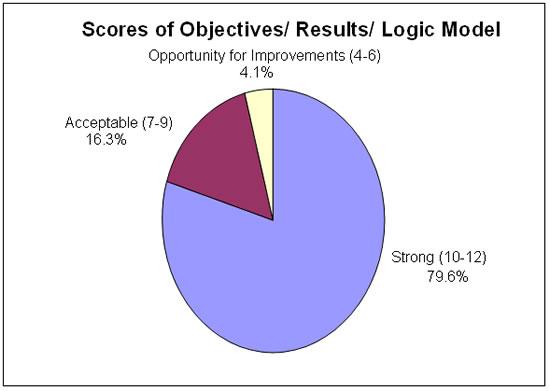

As these sections are all intrinsically linked, they are aggregated in this findings section. The findings are described in the chart below on a 12-point scale (four evaluated sections on a 3-point scale). A total score of 10-12 is considered "strong", a score of 7-9 is considered "acceptable", and 4-6 is considered "opportunity for improvement".

The results of our evaluation of the aggregate "Objectives / Planned Results / Logic Model" sections for the 49 RMAFs are depicted in the chart below:

- Almost 80% of RMAFs were within the "strong" range for the "Objectives / Planned Results / Logic Model" sections.

- Only two RMAFs received "opportunity for improvement" scores within this aggregate category.

- The most commonly identified problems with RMAFs that received less than "Strong" scores were the following: the use of "directional" outcomes, lack of clarity in Logic Model, unclear presentation of outcomes (no defined section), outcomes not directly linking to a Strategic Outcome, and listed outcomes actually being outputs.

Our findings indicate that these components of INAC's RMAFs are being consistently well done across the Department. This is illustrated by the fact that almost 80% of RMAFs received "Strong" scores in this aggregate category. This confirms what one would expect: programs usually have a good grasp of what they are trying to achieve (Objectives), and how they plan to do it (Planned Results). These should then be easily integrated into a clear and logically flowing program model (Logic Model). A program's inability to clearly articulate its Objectives and Planned Results may point to a larger systemic problem within that program.
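The aggregate banding used in this section can be sketched as follows. This is a minimal illustration that assumes the four sub-scores are simply added; the function and parameter names are hypothetical.

```python
def aggregate(objectives, outcomes_linked, outcomes_realistic, logic_model):
    """Add the four 3-point sub-scores into the 12-point aggregate."""
    return objectives + outcomes_linked + outcomes_realistic + logic_model


def band(aggregate_score):
    """Map an aggregate score (4-12) to the band named in this section."""
    if not 4 <= aggregate_score <= 12:
        raise ValueError("aggregate score must be between 4 and 12")
    if aggregate_score >= 10:
        return "strong"
    if aggregate_score >= 7:
        return "acceptable"
    return "opportunity for improvement"


# Hypothetical RMAF: clear objectives, partly linked outcomes, strong logic model.
example_band = band(aggregate(3, 2, 3, 3))
print(example_band)
```

Because each of the four sub-scores is at least one, the lowest possible aggregate is 4, which is why the "opportunity for improvement" band starts at 4 rather than 1.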

2.3 Performance Measurement Plan Findings

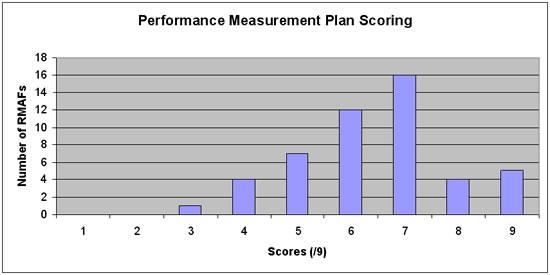

One of the central purposes of an RMAF is to provide a Performance Measurement Plan for each of INAC's programs. These PMPs are required to include a series of performance indicators which can be used to gauge program success. Furthermore, the PMPs are required to provide a solid data collection plan to ensure that the appropriate information is being collected to measure program success.

To assess the quality of the PMPs in existing RMAFs, our team scored each PMP out of a maximum of nine points. This score combined three related categories of the aforementioned review criteria, each of which was evaluated on a scale of 1-3. The results of our evaluation of the PMPs found in the Department's RMAFs are illustrated in the graph below.

As the above graph suggests, the majority of the PMPs we assessed contained a significant amount of useful information. However, there were important ways in which the plans were consistently weak.

In order to clearly explain our team's findings concerning the areas of weakness in the existing PMPs across INAC, this section of our report will present our analysis of the plans in two sub-sections. The first sub-section will discuss the quality of the performance indicators found in the RMAFs and the second sub-section will discuss the quality of the proposed data collection plans.

2.3.1 Performance Indicators

The development of useful performance indicators is one of the most important ways for programs to help promote successful results-based management. The RMAF should be viewed as a valuable tool that can be used to help clarify program goals and develop useful, precise and measurable performance indicators that can be used to gauge program success. While the majority of the RMAFs included some useful performance indicators, we identified several common problems that undermine the usefulness of the PMPs as management tools. The most important problems that we identified in the 49 RMAFs that we studied were:

- Many RMAFs included far too many indicators

Several of the RMAFs contained more than 40 performance indicators. In some instances, the list of performance indicators went on for several pages. Although there were usually some useful indicators to be found in these RMAFs, they were "buried" underneath so many less-useful indicators that it was often difficult for us to identify them. Program managers attempting to use them would probably encounter the same problem. Out of the 49 RMAFs studied, we found that 19 (39%) of them included too many performance indicators for a manageable performance measurement strategy.

- The problem of too many indicators was particularly prevalent for programs with many different outcomes

Programs with a large number of discrete activity areas and a large number of unrelated outcomes were more likely to include an unmanageably large number of performance indicators in their RMAFs. Some RMAFs, such as the National Child Benefit Reinvestment RMAF, the Labrador-Innu Comprehensive Healing Strategy RMAF, and the Renewal of the Urban Aboriginal Strategy RMAF, have several different activity areas and therefore require indicators to measure success towards several different and sometimes unrelated outcomes. The result is that these sorts of programs, most of which are relatively small (financially), tended to have several pages of performance indicators. This created PMPs that were complicated and generally unmanageable for these programs. This finding highlights the need for programs with a large number of outcomes and discrete activity areas to be selective in the development of performance indicators and to choose only those which will be most helpful in gauging program performance in a particular activity area.

- Insufficient attention to outcomes rather than outputs

Our analysis of the department's existing RMAFs showed that a large number of program PMPs fail to properly emphasize the measurement of progress towards program outcomes as opposed to the monitoring of outputs. One common problem was that, in many instances, too many of the listed performance indicators were output-focused. The large number of output-related performance indicators, such as the number of meetings held or the number of documents produced, often made the PMPs unmanageably large without adding to the program's ability to gauge success. Of the 49 RMAFs that were analyzed, our team found that 20 (41%) of the Performance Measurement Plans were overly focused on outputs and would have benefited from a clearer focus on measuring progress towards program outcomes.

- Many performance indicators were vague and difficult to measure

While the majority of the RMAFs that we analyzed contained some useful, measurable performance indicators, several of them also included indicators that were very vague and extremely difficult to measure accurately. Some RMAFs, for example the Initiatives Under the Youth Employment Strategy, contained many indicators that rely on the "perceptions" of participants concerning whether or not particular outcomes have been achieved. While this sort of information may be useful, it should not be the basis of a performance measurement strategy. Our team found that 18 (37%) of the Performance Measurement Plans that we studied placed too much emphasis on vague performance indicators and would benefit from a greater focus on precise, measurable progress. It is important for the developers of RMAFs to ensure that the PMP is solidly rooted in a series of performance indicators that are precise and measurable, and for which data is relatively easy to collect and interpret.

The PMP component of the RMAF holds the potential to be an extremely useful management tool. Currently, although most programs have relatively solid PMPs, there are several areas we have identified in which improvement is still needed. By focusing on the development of management strategies which are manageable in size, focused as much as possible on measuring progress towards outcomes, and rooted in precise, measurable performance indicators, INAC's programs can help ensure that their RMAFs function as useful management tools.

2.3.2 Proposed Data Collection Plan

As part of the evaluation of the PMP, the Data Collection Plan was also evaluated. The key review criterion was that the "data sources and frequency of data collection are clearly identified". The RMAF evaluations identified a number of issues pertaining to the RMAFs' Data Collection Plans.

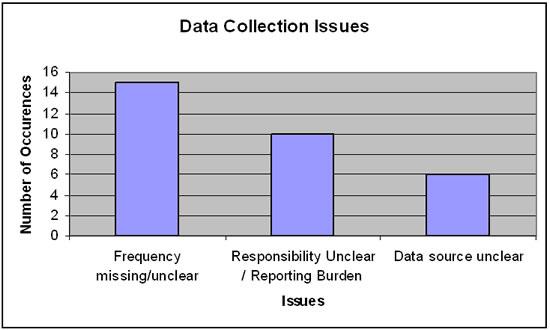

The chart below depicts the most common issues raised:

- Frequency missing/unclear

15 of the 49 RMAF evaluations mentioned that the Data Collection Plans were either unclear in their description of the frequency of the data collection, or the frequency was not indicated.

- Responsibility unclear / reporting burden

In ten instances, RMAF evaluations mentioned that the responsibility for data collection was not clearly expressed. This failure to clearly identify responsibility for data collection is a serious issue for INAC to address as it can often lead to one of two undesirable scenarios. If it is unclear who is responsible for data collection it is possible that no party will collect the necessary data, making successful performance management difficult. A second possibility is that if responsibilities are unclear, more than one organization will spend time and resources collecting identical data. Considering the heavy reporting burden that already faces First Nations on reserve, who are responsible for a significant portion of data collection, it is important to eliminate redundancy and inefficiency in data collection wherever possible. By clearly identifying responsibilities for data collection in RMAFs the programs can help promote effective, efficient program management while helping to lessen the reporting burden facing First Nations.

- Data source unclear

In six instances, RMAF evaluations indicated that the data sources to be used in various elements of the data collection strategy were not clearly identified. It is an obvious requirement that the source of important data should be clearly stated.

2.4 Evaluation Plan Findings

All RMAFs are required to include an "Evaluation Plan" which describes when the program is to be evaluated and provides information designed to assist evaluators in determining how to best assess program performance. Evaluation Plans are expected to include: key evaluation issues, data sources, a data collection strategy, a preliminary methodology and an estimated cost for the evaluation. As part of our effort to provide a comprehensive assessment of the quality of the department's existing RMAFs, our team examined the Evaluation Plan in each RMAF and assigned each Evaluation Plan a score according to the three point scale described in the methodology section of this report.

The results of our analysis of the quality of existing RMAF Evaluation Plans were:

- 45% of the RMAFs had "strong", detailed Evaluation Plans that included all of the required components.

- 37% of the RMAFs had acceptable Evaluation Plans that provided significant amounts of useful information but failed to include one or more required components.

- 18% of the RMAFs either failed to provide an Evaluation Plan at all or provided a very minimal and/or vague Evaluation Plan with few specific details.

Having sorted the Evaluation Plans into these three categories, our team next analyzed the Evaluation Plans which did not receive a perfect score in order to identify the most common omissions. Our key findings were:

- The RMAFs that received a grade of "opportunity for improvement" either contained no Evaluation Plan at all or provided an extremely minimal plan which would be of little use to evaluators.

- The most common omission found in the RMAFs that we scored as "acceptable" was that they failed to include a detailed methodology that could be used to inform future evaluations. Of the 18 RMAFs that received a grade of "acceptable," 9 of them either did not include a methodology or provided insufficient detail to help inform future evaluations.

- Six of the 18 RMAFs that received a score of "acceptable" failed to provide adequate preliminary "evaluation issues".

- Evaluation Plans that received a grade of "acceptable" generally omitted one or more required components which are intended to provide specific information about the planned evaluation. Of these 18 RMAFs, 4 did not include the data sources to be used, 4 did not include a data collection strategy and 6 did not provide an estimate for the cost of the proposed evaluations.

While most of the Evaluation Plans that we studied included significant useful information that will be helpful for evaluators, our analysis points to several possible areas of improvement that will help RMAFs to function as an effective performance management tool. Of particular importance is the aforementioned failure of a large number of RMAF Evaluation Plans to include useful methodologies and evaluation questions. In order for RMAFs to serve as useful tools for facilitating the measurement of program performance, it is important that RMAFs be more consistent in their inclusion of proposed evaluation issues and thorough preliminary evaluation methodologies.

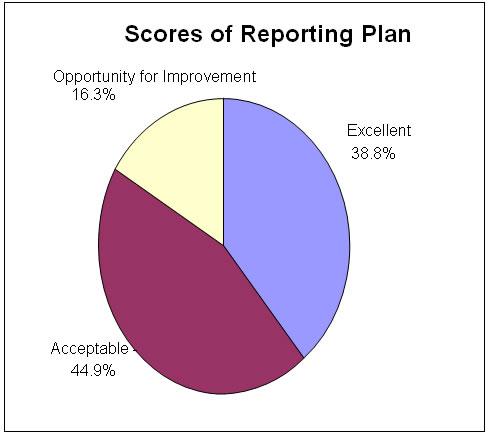

2.5 "Reporting Plan" Findings

The final category of the evaluations focused on the programs' strategies for reporting their performance data to the department at large. Key characteristics of an acceptable reporting plan include: clear descriptions of the nature and scope of the plan, responsibility for the preparation of the plan, the reporting frequency, and the intended user(s) or readers of the reports.

The Reporting Plans were evaluated on the 3-point scale that is described in the "Methodology" section found above. The results of our evaluation of the Reporting Plans for the 49 RMAFs are depicted in the chart below:

Our overall findings for the evaluation of the Reporting Plans are listed below:

- Almost 84% of RMAFs received a score of "acceptable" or "strong" on their Reporting Plan.

- Few RMAFs received an "opportunity for improvement" rating for their Reporting Plan.

- For those RMAFs that received a score of 1 (opportunity for improvement) or 2 (acceptable), the most common problem indicated was missing components. For example, the plan did not outline who the intended users are, or the plan did not clearly indicate the reporting frequency.

The findings indicate that the Reporting Plans are generally of acceptable quality. While some improvement is needed to ensure that all necessary components are included, very few of the plans did not meet minimum requirements.

2.6 Conclusions

The RMAF is, potentially, a very useful tool for facilitating effective, results-based program management. Our analysis concluded that, while most existing RMAFs are of acceptable quality and contain significant useful information, there are several areas which are generally in need of improvement. In order for future RMAFs to serve their purpose as effective management tools, we identified the following key issues that the department should seek to address:

- Many RMAFs (39%) contain too many performance indicators. Reducing the number of performance indicators will allow for the development of manageable PMPs.

- Performance indicators are still too focused on outputs rather than outcomes. By ensuring that indicators are aligned with outcomes, programs can ensure that they are appropriately measuring progress towards the program's stated objectives.

- There is a need to ensure that program data collection strategies are clear and comprehensive. To ensure that all necessary data is being collected and to prevent duplication in the collection of data, RMAFs must clearly identify responsibilities for data collection. RMAFs must also clearly state the data sources to be used and the frequency of collection to ensure effective, efficient data collection.

- The Evaluation Plans included in RMAFs should consistently include preliminary evaluation issues and proposed evaluation methodologies.

- The Reporting Plans contained in existing RMAFs are generally well done. In order to further improve the reporting plans it is necessary to ensure that all necessary components are consistently included.

Chapter Three: Status of RMAF Implementation

3.1 Rationale

A component of this study was to investigate the status of RMAF implementation for programs across INAC. Data collection commitments for programs as stated in their respective RMAFs were assessed by comparing responses from program surveys to the PMPs found in the program RMAFs. In evaluating the status of RMAF implementation, we also aimed to identify gaps and duplications in data collection.

3.2 Approach

In June 2008, we developed a survey (see Appendix D) to identify the extent to which RMAF PMPs were being implemented. Surveys were distributed to Assistant Deputy Ministers who, in turn, asked program administrators to fill out the survey. Our team then analyzed the completed surveys and reported the major findings. In reporting on the general trends, we found it useful to aggregate survey responses in the following manner:

- "Few" refers to the same response by 1-3 surveys.

- "Some" refers to the same response by 4-6 surveys.

- "Many" refers to the same response by 7 or more surveys.
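The "Few", "Some" and "Many" thresholds above can be applied mechanically, as in the short sketch below. The response categories and counts are hypothetical and serve only to illustrate the labelling.

```python
from collections import Counter


def frequency_label(survey_count):
    """Label how widely a response was shared, using the thresholds above."""
    if survey_count < 1:
        raise ValueError("a response must appear in at least one survey")
    if survey_count <= 3:
        return "Few"
    if survey_count <= 6:
        return "Some"
    return "Many"


# Hypothetical coded responses, one entry per survey giving that response.
responses = (
    ["lack of capacity"] * 7
    + ["indicator reassessed"] * 4
    + ["other"] * 2
)

labels = {response: frequency_label(count) for response, count in Counter(responses).items()}
print(labels)
```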

3.3 Profile of the Survey Responses

As of the first week of August 2008, we had received 44 responses to our survey from program administrators. Of these, 26 were completed surveys adequate for analysis (see Appendix G for the survey results). In one case multiple surveys were received for a single RMAF, so the 26 surveys corresponded to 22 RMAFs. The remaining 18 responses did not include a completed survey, instead indicating that a survey could not be completed for one of the following reasons:

- Many of the RMAFs in the initial inventory were outdated. Approved several years ago, the RMAFs in this group either had been replaced by an updated RMAF, or corresponded to programs that were no longer active.

- Some RMAFs had been completed so recently that a survey could not be filled out for them. For some of the programs in this group, implementation had not yet begun. Also included in this category were programs that were updating or awaiting final approvals for their RMAFs before they began implementation of their performance measurement strategies.

- No response was received for many RMAFs. One possible explanation is that program administrators are not always aware that their programs are covered by an RMAF. It is also possible that some of the RMAFs for which we received no response fall into the outdated or too-recent categories. Finally, surveys may not have reached the proper parties, or program managers may have been on vacation.

- We also received a few surveys for RMAFs that were not included in our original inventory. These RMAFs were approved by the Treasury Board after the April 2008 cut-off date of the Treasury Board Submissions that we reviewed. Because they were received well after our RMAF quality and completeness analysis (Chapter II) had been completed, they were not included in that portion of the project. However, their surveys were included in the data collection analysis that follows.

A graphical description of this breakdown is provided below.

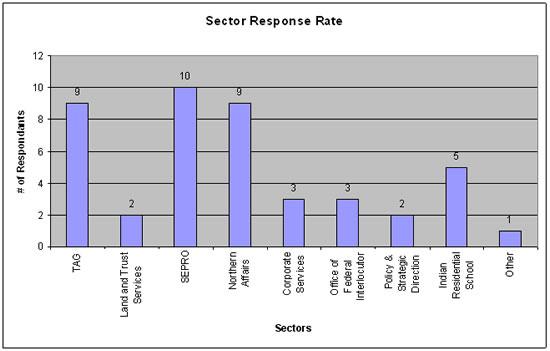

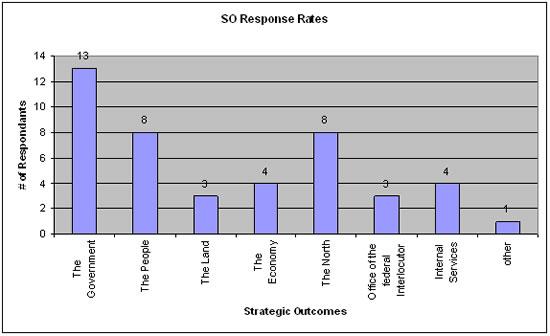

Responses were received from all sectors, although at slightly different rates. The breakdown of the 44 program responses by sector and Strategic Outcome (SO) can be graphically represented as follows:

3.4 Specific Findings

Our key findings derived from our analysis of the program surveys are as follows:

- Data is being collected for 42.9% of all performance indicators listed in the 22 RMAFs that were examined in this part of the project.

The 22 RMAFs for which we received surveys included a total of 492 performance indicators. Of these 492 performance indicators, the corresponding surveys indicated that data was being collected for 211 performance indicators in total (42.9%). Notably, the surveys revealed that data was being collected for an additional 27 indicators not listed in the respective program RMAFs [Note 2]. A list of all indicators included on returned Program Manager Surveys is included in Appendix H.

- Of all the indicators for which data is being collected, 83.6% are said to be used (199 out of 238 collected indicators).

The most commonly reported reason for non-use was that data for indicators was still being collected. Nevertheless, it appears as though most of the data collected for the indicators is being used. Furthermore, respondents indicated that data was most commonly used for annual and/or quarterly reporting, and departmental and other performance reports.
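The two headline percentages above follow directly from the reported counts. As a minimal sketch (the function and variable names are illustrative and do not come from the study, and it assumes the 238 collected indicators are the 211 listed indicators plus the 27 unlisted ones), the arithmetic can be reproduced as follows:

```python
def percentage(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Counts reported in the survey analysis above.
indicators_listed = 492    # indicators listed in the 22 RMAFs
listed_collected = 211     # listed indicators for which data is collected
unlisted_collected = 27    # collected indicators not listed in the RMAFs
collected_used = 199       # collected indicators reported as being used

# Assumption: the use rate is measured against listed plus unlisted indicators.
total_collected = listed_collected + unlisted_collected

collection_rate = percentage(listed_collected, indicators_listed)
use_rate = percentage(collected_used, total_collected)

print(f"Collection rate: {collection_rate}%")
print(f"Use rate: {use_rate}%")
```

Note that the two rates use different denominators: the collection rate is measured against all indicators listed in the RMAFs, while the use rate is measured against indicators actually being collected.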

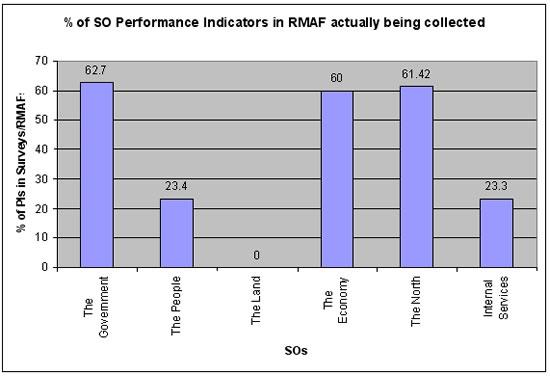

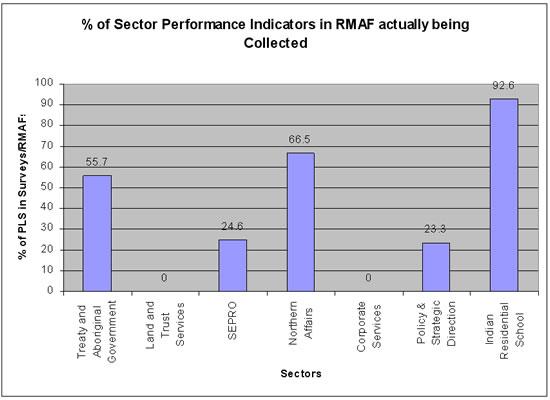

- When surveys and their corresponding RMAFs are grouped according to strategic outcomes, data is being collected for performance indicators in the following manner:

- When surveys and their corresponding RMAFs are grouped according to sectors, data is being collected for performance indicators in the following manner:

- Many respondents perceive that data requirements are currently being met.

When asked "To what extent is the data identified in the RMAF's Performance Measurement Framework (PMF) currently being collected?" (Question #1), 18 out of 26 respondents indicated that it is between 75% and 100%. Given that the surveys show data being collected for only 42.9% of listed indicators, this suggests that program managers may be overestimating the implementation of their RMAF's PMF.

- Many of the respondents perceive the data being collected as clearly demonstrating effectiveness of their programs.

When asked "Is the data being collected sufficient to provide an accurate picture of the effectiveness of the program?" (Question #3), 82% of the respondents indicated that it is.

3.5 General Findings

Trends in our analysis point to three general findings which contribute to a better understanding of performance measurement and reporting at INAC.

- Finding #1: Overall, data collection based on RMAF PMPs is far from complete.

According to the surveys we received, data is actually being collected for only 42.9% of the performance indicators listed in the RMAFs. This is a troubling statistic. If data for the required indicators is not being collected, there may be insufficient information to determine whether or not a program is successful. Paradoxically, in light of the low data collection rate, only a few of the survey respondents indicated that they believe the data being collected is insufficient "to provide an accurate picture of the effectiveness of the program." One possible explanation is that programs are only collecting the important performance indicators. As noted in Chapter 3, many RMAFs include an unnecessary number of "output-based" indicators that will not be particularly helpful in determining the effectiveness of a program. Interestingly, for those RMAFs that break down their performance measurement indicators by output/activity, immediate outcome, intermediate outcome, and final outcome, a smaller percentage of intermediate and final outcome indicators was being collected than of output/activity and immediate outcome indicators.

It is important to note that these values and percentages may be inaccurate. Many programs are only able to collect intermediate and final outcome data in the later stages of the program implementation. As such, it is possible that the proportion of these indicators included as being collected in Program Manager Surveys was skewed downwards. While the data does not clearly indicate that program managers are simply declining to collect less useful output-oriented data and instead focusing on more valuable outcome oriented data, this may still be the case.

The low data collection rate and several written comments indicate that original data collection plans are often modified by program administrators over time to better represent program activities. Indeed, several survey respondents indicated that the initial PMPs laid out in their program RMAFs had been or were in the process of being revised.

On one hand, it is encouraging to see that programs are flexible enough to make the RMAFs work for them. Adapting the PMP may demonstrate a program's ability to recognise new circumstances and evolve accordingly over time to better represent the program's outcomes. One program wrote that "we are working to improve the quality of data so it can provide us with better evidence of the characteristics of our clients, the degree to which our programs meet their needs and a more accurate picture of program effectiveness." Considering how new RMAFs are to line departments, it is not surprising that many programs may have written their PMPs without full knowledge of the consequences of these plans and are therefore revising them as they implement them over time.

On the other hand, this gap between commitments and actual data collection may demonstrate that programs are revising the criteria they are being held accountable for without approval from either Audit and Evaluation Sector or Treasury Board Secretariat. This would render performance measurements of these programs null.

- Finding #2: There is a lack of capacity within INAC and among First Nations to collect data.

One of the most prevalent comments in returned surveys was that there is insufficient capacity to collect data for all of the performance indicators listed in the RMAF. This is likely the most important reason that data collection coverage is so low. Limited capacity of both INAC and stakeholders was reported to be a problem. This lack of capacity includes, but is not limited to, insufficient resources, low ability among some First Nations to fill out the data collection forms, lack of internet access for data reporting, lack of INAC personnel to perform data analysis, and a lack of information systems to assess the data. For instance, one response explained,

"There are three barriers [to effective data collection]:

a) We don't have the most relevant indicators and the data collection systems associated with them;

b) First Nations don't have the capacity to collect data;

c) We don't have the capacity at HQ and Regions to collect and analyze the data being provided by First Nations."

Another wrote, "The barriers to more effective data collection are: not wanting to add to the reporting burden of First Nations, the availability of data the program wants to collect, as well as no information system to collect data, store and analyze data."

Even if the data is being collected, these factors can lead to an inability to utilise the data and monitor performance in addition to lags and gaps in the reporting of these performance measurements.

- Finding #3: No obvious data collection overlap.

One of the hopes for this project was that overlapping data collection commitments and collection activities would be identified. If we had determined that many different programs were collecting the same data, there would be room for streamlining to save resources and reduce the reporting burden on First Nations. Unfortunately, our team was unable to locate any significant areas of overlapping data collection. The most important reason for this was that most performance indicators are program specific. For example, RMAFs dealing with climate change will measure the reduction in greenhouse gas emissions that occurred as a result of upgrades to power plants funded by the program, rather than the total change in greenhouse gas emissions. A second possible reason is that so few indicators are being collected. If 100% rather than 42.9% of indicators were being collected, it is possible that overlap would become a problem. Finally, one very interesting possible explanation for the lack of overlapping data collection is that Program Managers are already refraining from collecting some possibly overlapping data in order to reduce the reporting burden on First Nations. Indeed, some survey respondents cited the reporting burden as a reason why data for all indicators was not being collected.
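The kind of overlap check discussed above can be illustrated with a short sketch. This is an illustration only: the study does not describe an automated method, and the program names, indicator strings, and the `find_overlaps` function are hypothetical. The sketch groups indicators by a crudely normalized form and reports any that appear under more than one program.

```python
from collections import defaultdict


def find_overlaps(indicators_by_program: dict[str, list[str]]) -> dict[str, list[str]]:
    """Map each normalized indicator to the programs collecting it,
    keeping only indicators collected by more than one program."""
    programs_by_indicator = defaultdict(set)
    for program, indicators in indicators_by_program.items():
        for indicator in indicators:
            # Crude normalization: lower-case and collapse whitespace.
            key = " ".join(indicator.lower().split())
            programs_by_indicator[key].add(program)
    return {
        key: sorted(programs)
        for key, programs in programs_by_indicator.items()
        if len(programs) > 1
    }


# Hypothetical data for illustration only (not taken from the study).
example = {
    "Program A": ["Number of housing units built", "Graduation rate"],
    "Program B": ["graduation  rate", "Kilometres of road maintained"],
    "Program C": ["Number of water systems inspected"],
}

overlaps = find_overlaps(example)
print(overlaps)
```

Exact matching of this kind would miss indicators that are phrased differently across programs, so in practice a check like this could only flag candidates for manual review.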

Chapter Four: Conclusion

This report has listed and explained the main findings of the RMAF Special Study project. When we compared INAC's RMAFs to the Treasury Board Secretariat RMAF guidelines, we found that overall, their quality was generally high. Nevertheless, we found that there remains substantial room for improvement in the quality of RMAFs. Most importantly, too many RMAFs include far too many indicators, focus too much on outputs and too little on outcomes, include unclear or incomplete data collection strategies, and provide little information on proposed evaluation issues and methodologies.

With respect to data collection implementation, it appears that less than half of the performance indicators listed in RMAFs are actually being collected. Insufficient stakeholder and INAC capacity is likely the most important factor causing the low level of data collection. Additional possible sources of low data collection are the avoidance of what are perceived as unhelpful indicators and attempts to reduce the First Nation reporting burden. There do not seem to be significant opportunities to further reduce that reporting burden by eliminating overlapping data collection efforts, as no such overlap was identified.

The comparatively small problems with RMAF quality seem much less serious than the extremely poor data collection coverage. However, it should be noted that the problems may be related. Some of the most troubling aspects of RMAF quality were the very large number of indicators in many RMAFs, and the very large number of output-oriented rather than outcome-oriented performance indicators. If indicators were fewer and more narrowly focused on what matters, it could become easier to collect a larger proportion of performance indicators.

In conclusion, one thing is absolutely clear: the RMAF's potential is only realized when it is used as the management tool it was designed to be. Implementation is only the first step in this. Just as importantly, the department must take the insight that RMAFs and their Performance Measurement Strategies offer seriously. To date, this has been difficult because of the scarcity of information available on RMAF quality and coverage within the department. As such, the most valuable aspect of this study is not in its conclusions, but rather in the very large quantity of raw information that is appended to this report. This information should be kept up to date and extended so that ongoing performance monitoring and evaluation can increasingly benefit from the wealth of information that well-designed and well-implemented RMAFs provide.

Recommendations

The RMAF Project Final Report submitted on August 13th drew a variety of conclusions regarding RMAF quality and implementation at INAC. This document is intended to supplement the final report by providing a few concrete recommendations for how INAC's use of RMAFs could be improved.

1. Performance Measurement Plans (PMPs) should contain short lists of performance indicators that are direct, outcome-oriented, precisely defined, and collectable.

There are five parts to this recommendation:

- PMPs should contain a short list of performance indicators. Long PMPs suffer from lack of focus and are difficult to implement. Reducing the total number of indicators will force program administrators to select the most effective indicators and give a less diluted picture of overall program efficacy.

- Indicators should be direct. Proxy measures (for example, measuring perceptions of outcomes rather than outcomes) are substantially less valuable than indicators that directly measure whether or not the objectives of an initiative have been met.

- Indicators should be outcome-oriented. Indicators that focus on outputs and activities do little to show how effective a program has been, and only dilute the overall usefulness of a PMP.

- Indicators should be precisely defined. It should be absolutely clear what an indicator will measure and how the necessary data will be collected. Poorly defined indicators only frustrate those responsible for data collection and necessitate revisions to the PMP.

- Indicators should be collectable. The value of the information indicators provide should be weighed against the cost and feasibility of allocating resources to data-collection efforts. Any indicator that cannot or will not be collected should be excluded from the PMP.

It appears that currently, only a small proportion of the performance indicators that RMAFs list are being collected. Improving the focus and quality of PMPs while decreasing their size will help give a less diluted assessment of overall program performance, increase the overall rate of data collection, ensure that the most essential indicators are always collected, and reduce the strain on data-collection resources of INAC and First Nations.

2. INAC should track the status of RMAF development and implementation.

RMAFs are intended as tools to be used in on-going program management. In spite of this, until now, it has been impossible to keep track of which programs do and do not have RMAFs, whether RMAFs are still active, whether they have been revised, and whether they are being implemented. A near complete lack of information on the current status of RMAFs makes it all but impossible for them to be used in an on-going manner.

While responsibility for implementing and keeping RMAFs current ultimately rests with the implementing sector, it is not surprising that some RMAFs get lost in the shuffle when many managers are liable to encounter interest in their RMAF only twice – once when the program authorities come up for approval or renewal, and once when the program is evaluated. Mechanisms to monitor – and when necessary, correct – the status of RMAF implementation must be developed. The first step towards doing this successfully is simply keeping an up-to-date record of the status of every INAC RMAF.

3. Engaging Program Managers

Our survey results indicate that Program Managers are very concerned with the quality of their programs and the experience of the clients they serve. However, the limitations they face (including time and resource constraints as well as lack of capacity) negatively impact their ability to carefully plan for performance measurement and evaluation, as well as to monitor programs and collect data. Improving their understanding of how RMAFs are a useful program management tool and building their capacity for strategic planning could improve RMAF quality and facilitate their implementation in the future.

Areas for Further Investigation

The project participants have identified the following areas where additional research would be informative.

1. Ongoing Project Maintenance

The most useful aspect of the project may be the extensive information gathered on INAC's RMAFs and their implementation. This information should be kept up to date.

- RMAFs should be added to the RMAF inventory as they are approved by the Treasury Board.

- Inquiries should be made as to implementation, renewal, and expiry arrangements as RMAFs in the inventory age.

- RMAFs for which the current status remains unknown should be investigated.

2. The Role of Consultants in RMAF Development

During the study, it became clear that many of the RMAFs examined had been developed by consultancy firms rather than the sectors that would eventually be responsible for their implementation. This may mean that RMAF implementers are not as familiar with their RMAFs as they should be, and/or that the RMAF authors were not as familiar with the realities of program implementation as they should have been. It may be possible to identify those RMAFs that were developed outside of the department, and cross-reference this information with RMAF quality and degree of implementation.

3. Mapping RMAFs by Program Authority

It remains unclear what percentage and what portions of INAC's overall spending are covered by RMAFs. An investigation into RMAF coverage by program authority would be necessary to make progress on this front.

Additionally, linking up with other sectors of INAC (for example, Finance) that also have an interest in this area would be useful in carrying out the next steps of the project.

Appendix A: RMAF Special Study Terms of Reference

Context

The purpose of this study is to contribute to the Evaluation, Performance Measurement, and Review Branch's annual commitment to review performance-monitoring and results-reporting within the Department of Indian and Northern Affairs. This will be accomplished by assessing the quality and implementation of Results-Based Management Accountability Frameworks (RMAFs) within the Department. Furthermore, this study will contribute to preparations for the Strategic Review of 2009-10 by reporting on the state of performance measurement in the department through the lens of RMAFs. The findings from the study will be presented as a final report to the Evaluation team.

Approach

Four steps are required to complete this final report. First, the study requires collecting all existing RMAFs by reviewing all Treasury Board Submissions by the Department since 2000.

The second task is to "map out" (correlate) the collected RMAFs against the 2009-2010 INAC Program Activity Architecture (PAA). RMAFs currently under development will also be considered if possible. By examining which activities and sub-activities on the PAA are covered by an RMAF, the department can better understand the state of accountability and performance measurement within the department. To complement this assessment, some study participants are also mapping audits and evaluations conducted to date by the A&E sector.

The third task is to assess the quality of the collected RMAFs, and of the performance measurement tools they describe, in order to determine their effectiveness. RMAFs will be evaluated against the requirements set out by the Treasury Board Secretariat. The logic and usefulness of performance indicators will also be assessed.

In addition, this study will verify whether the commitments stated in RMAFs are currently being implemented. Hence, the fourth task is to determine whether data is actually being collected by the programs based on their RMAF commitments. This will be done via surveys to the programs. The collected information will be matched against the information from relevant RMAFs. With this knowledge, the department can identify where the gaps or duplications in the data exist and continue to work towards decreasing reporting burdens imposed on First Nations.

Methodology

This study will perform the following:

- An RMAF inventory (completed June 3rd; the team collected approximately 50 RMAFs):

- Identify all existing RMAFs and create an inventory list by:

- examining all INAC Treasury Board Submissions since 2000;

- exploring the Audit and Evaluation Sector's master authority table;

- meeting with members of the evaluation team.

- An RMAF Map: (to be completed by end of June)

- Use the PAA to determine where RMAFs fit into the PAA of the department.

- A review: (to be completed by end of June).

- Assess the quality of the RMAFs using the template based on Treasury Board criteria.

- Take note of data collection commitments listed in each RMAF.

- Determine the State of RMAF Implementation: (July)

- Verify data collection commitments are being fulfilled.

- This is to be done using a survey written by the study team, but distributed to programs by senior members of the evaluation team.

- Determine where there are gaps or duplications in the data collected across the department.

- Final Report: (to be completed by August 7th)

- Draft a final report and presentation materials describing findings.

- Brief the evaluation team on August 7th.

Key Deliverables

- A written report consisting of (to be completed by August 7th):

- executive summary;

- background (description of the project, RMAF requirements & purpose, etc.);

- RMAF and program evaluation coverage at INAC;

- data collection, gaps and duplications;

- notable observations; and

- future directions.

- Useful raw data and working documents (accompanied by brief explanations to facilitate interpretation if necessary) such as:

- Treasury Board submissions inventory table;

- RMAF inventory table;

- RMAF evaluation forms;

- data indicators tables; and

- survey to programs results.

Project Management

- Each person is responsible for developing an RMAF inventory of their assigned SO.

- Each person will be responsible for a set number of RMAFs to map and evaluate.

- Group members in Toronto will focus on mapping completed audit and evaluations to the PAA to complement findings from the RMAF review.

- Individual group members will be given specific additional responsibilities (liaising with the members of the evaluation team, chairing meetings, taking minutes, etc.)

Appendix B: Treasury Board Secretariat RMAF Guidelines

Preparing and Using Results-based Management and Accountability Frameworks

Appendix C: RMAF Review Template

| Reader | |
|---|---|
| RMAF #: RMAF Title | |
| TB Submission #(s) | |
| Date Approved | |
| SO/ PA/SA/ SSA | |

| Objectives and Expected Results | Review Criteria | Score (1-3): 1 incomplete; 2 meets requirement but needs work; 3 meets all requirements | Explanation for the Score Given |
|---|---|---|---|
| Objectives | | | |
| Planned Results | | | |
| | | | |
| Logic Model | | | |

| Evaluation | Review Criteria | Score (1-3) | Explanation for the Score Given |
|---|---|---|---|
| Performance Measurement Plan | | | |
| | | | |
| | | | |
| Evaluation Plan | | | |

| Reporting | Review Criteria | Score (1-3) | Explanation for the Score Given |
|---|---|---|---|
| | | | |

Appendix D: RMAF Special Study Survey to Program Managers on Data Collection

RMAF Title:

Sector:

Strategic Outcome / Activity / Sub-Activity:

Your Name and Title:

Contact Information:

1. To what extent is the data identified in the RMAF's performance measurement framework (PMF) currently being collected? (All performance indicators for this program are outlined in the PMF section of the RMAF) (please check a box):

__ 0-25%

__ 25-50%

__ 50-75%

__ 75-100%

__ Do not know

2. Please specify in the table that follows (and expand if necessary to provide complete information):

- only those indicators for which data is currently being collected;

- how often data is collected for each indicator;

- how the collected data is being used (e.g. Quarterly Reporting, DPR, etc.).

| Performance Indicators for which data is currently and consistently being collected | Frequency/cycle of the data collection (annual, quarterly, etc.) | Do you currently use this data? (Y/N) | If you use the data, how is this data currently being used (i.e. what is the reporting format used)? | If you do not use this data, please specify why. |
|---|---|---|---|---|

Additional Questions:

3. Is the data being collected sufficient to provide an accurate picture of the effectiveness of the program?

4. If data for indicators listed in the RMAF are not being collected, why is this the case?

5. What are some of the barriers to more effective data collection?

Additional Comments (optional):

Footnotes

1. For the sake of readability, this report often refers to a score of 3/3 as "strong", a score of 2/3 as "acceptable", and a score of 1/3 as "opportunity for improvement".

2. These figures should be considered approximations. At times, it was difficult to determine whether a given indicator should be counted as a single indicator or as multiple indicators. In addition, the way in which performance indicators were phrased in the surveys was not identical to the way they were originally stated in the respective RMAFs. As such, judgement was occasionally required when attempting to identify the same indicators from surveys and their corresponding RMAFs.